
1.0. Introduction

As artificial intelligence (AI) becomes increasingly integral to industries such as life sciences and finance, the safe, ethical, and continuous validation of AI has emerged as a top priority. Continuous Lifecycle Governance (cLCG) is gaining recognition as a foundational framework for addressing these challenges. This article explains why cLCG matters for the success and sustainability of AI products and outlines how this governance approach operates throughout the AI lifecycle.

“You will need Continuous Governance built into any AI app that is deployed in the GxP arena. We have partnered with IBM to ensure all our AI models are continuously validated leveraging watsonx.gov. Our mantra is not just validation but continuous validation in everything we provide.”

2.0. Why cLCG is Essential

AI is dynamic and ever-evolving: from the moment an AI system is conceived through its deployment and ongoing use, its nature and impact change. This evolution introduces new risks and requires a governance model that can adapt accordingly.

2.1. Ethical Concerns and Trust

AI decisions can have significant regulatory and ethical implications, particularly in sensitive areas like GxP manufacturing. Ensuring fairness, transparency, and accountability is vital for maintaining the trust of Quality Assurance (QA) and preventing biases or unintended harm.

2.2. Regulatory Compliance

Regulators worldwide are imposing increasingly stringent requirements on AI deployment, through instruments such as the EU AI Act and the GDPR. Continuous governance enables organizations to adapt as regulations evolve, helping them avoid costly penalties, operational disruptions, and reputational damage. Organizations with established governance systems are also better prepared for audits and external scrutiny.

For GxP apps that leverage AI, you have only one option: integrated continuous governance.

2.3. Mitigation of Risks

AI models can drift over time due to changing data patterns, operational anomalies, or cyber threats. Without effective monitoring, minor issues can escalate into significant problems, such as biased outputs or security vulnerabilities.

Closed models are generally preferred in highly regulated areas. Even then, cLCG helps ensure that risks are identified and mitigated early, protecting both the system and its stakeholders.

2.4. Maximizing Value

AI systems represent substantial investments that require ongoing optimization to remain relevant and effective. Governance protocols support the long-term alignment of AI systems with business objectives, user expectations, and market demands, ensuring that models continue to provide accurate, actionable, and meaningful insights.

3.0. How cLCG Works

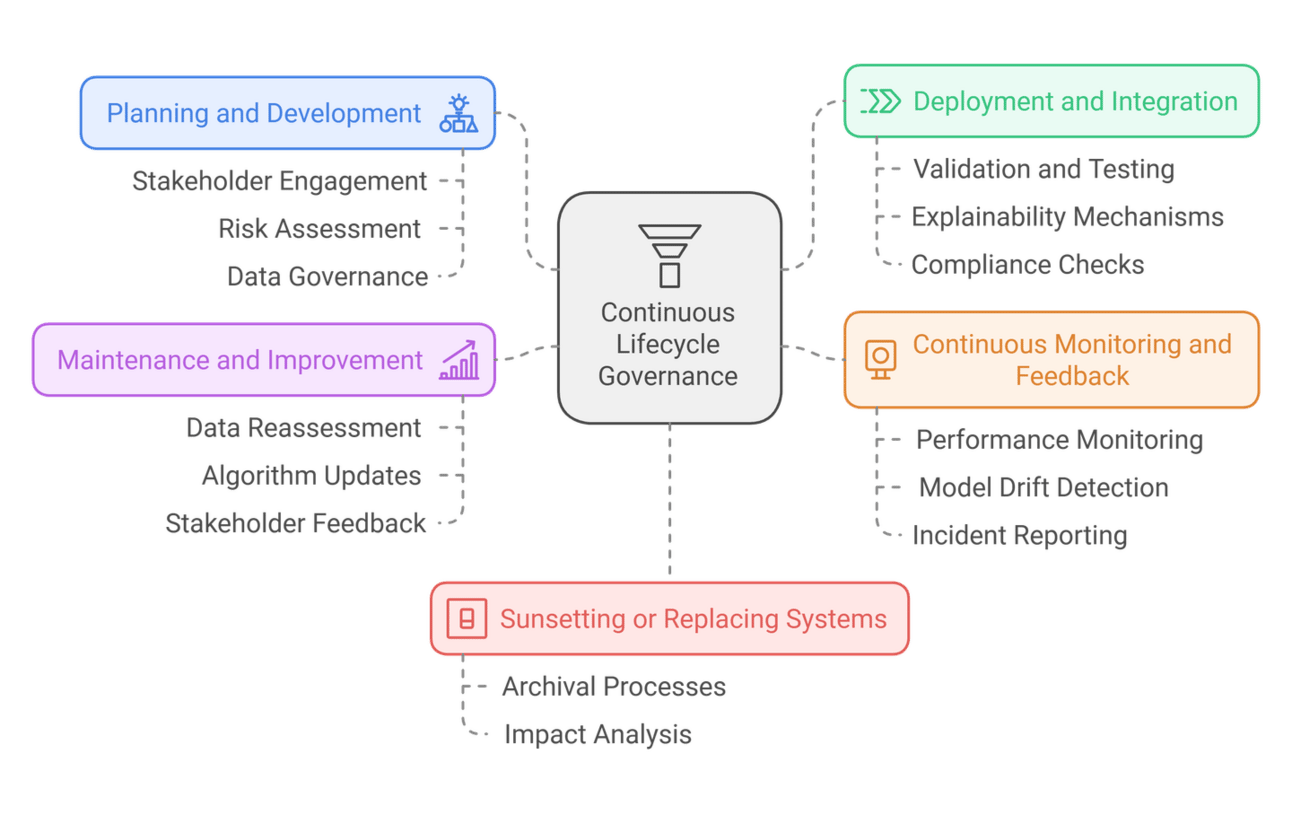

Implementing cLCG involves integrating governance protocols throughout the AI lifecycle.

Here’s a detailed breakdown of its key components:

3.1. Planning and Development

At the inception stage, governance begins with defining clear objectives and ethical guidelines. This involves:

Stakeholder Engagement: Collaborating with diverse teams to align on goals and risks.

Risk Assessment: Identifying potential biases, vulnerabilities, and societal impacts.

Data Governance: Ensuring high-quality, unbiased, and representative datasets.

Governance at this stage lays a solid foundation for transparency and accountability in AI systems.
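The Data Governance item can be made concrete with automated checks that run before any training takes place. The sketch below is a minimal illustration, assuming records arrive as Python dictionaries; the function names, the `site` and `temp` fields, and the 20% minimum share are hypothetical placeholders, not requirements taken from any regulation or from xLM's tooling. It covers only two of many possible checks: flagging underrepresented groups and counting missing values.

```python
from collections import Counter

def underrepresented_groups(records, group_key, min_share=0.10):
    """Return {group: share} for groups below min_share of the dataset.

    Records missing group_key are counted under the group None.
    """
    counts = Counter(r.get(group_key) for r in records)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("dataset is empty")
    return {g: n / total for g, n in counts.items() if n / total < min_share}

def missing_value_counts(records, fields):
    """Count, per required field, the records where it is absent or None."""
    return {f: sum(1 for r in records if r.get(f) is None) for f in fields}

# Hypothetical batch records: nine from site A, one from site B with a missing reading.
batches = [{"site": "A", "temp": 21.0 + i * 0.1} for i in range(9)]
batches.append({"site": "B", "temp": None})

print(underrepresented_groups(batches, "site", min_share=0.2))  # {'B': 0.1}
print(missing_value_counts(batches, ["site", "temp"]))          # {'site': 0, 'temp': 1}
```

Which groups matter (production site, equipment line, patient population) and what share counts as "representative" would be decided during stakeholder engagement and risk assessment, not hard-coded as here.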

3.2. Deployment and Integration

As models move into production, governance protocols focus on ensuring operational integrity:

Validation and Testing: Conducting rigorous testing to verify that the model performs as intended across various scenarios on an ongoing basis.

Explainability Mechanisms: Incorporating tools that make AI decision-making transparent for both technical and non-technical stakeholders.

Compliance Checks: Ensuring that all regulatory and ethical requirements are met prior to deployment.
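Validation and compliance checks are easier to audit when acceptance criteria are written down as data and evaluated mechanically before release. The following is a minimal sketch of such a release gate, assuming metrics have already been computed on a validation set; the `Criterion` class, the metric names, and the thresholds are hypothetical examples, not values from any guidance document or product.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Criterion:
    metric: str
    threshold: float
    higher_is_better: bool = True

    def passes(self, value: float) -> bool:
        # Compare in the direction that counts as "good" for this metric.
        return value >= self.threshold if self.higher_is_better else value <= self.threshold

def release_gate(results: dict, criteria: list[Criterion]) -> list[str]:
    """Return human-readable failures; an empty list means the gate passes."""
    failures = []
    for c in criteria:
        if c.metric not in results:
            failures.append(f"{c.metric}: not measured")
        elif not c.passes(results[c.metric]):
            failures.append(f"{c.metric}: {results[c.metric]} vs threshold {c.threshold}")
    return failures

# Hypothetical acceptance criteria from a validation plan.
criteria = [
    Criterion("accuracy", 0.95),
    Criterion("false_negative_rate", 0.02, higher_is_better=False),
    Criterion("max_group_accuracy_gap", 0.05, higher_is_better=False),
]
results = {"accuracy": 0.97, "false_negative_rate": 0.03}
print(release_gate(results, criteria))
# ['false_negative_rate: 0.03 vs threshold 0.02', 'max_group_accuracy_gap: not measured']
```

A metric that was never measured is reported as a failure rather than skipped, so an incomplete test run cannot silently pass the gate.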

3.3. Continuous Monitoring and Feedback

Once deployed, governance does not cease; it intensifies:

Performance Monitoring: Tracking metrics such as accuracy, fairness, and efficiency to ensure consistent performance on a continuous basis.

Model Drift Detection: Identifying and addressing deviations in behavior due to evolving data patterns.

Incident Reporting: Establishing a feedback loop to capture anomalies, user complaints, or ethical concerns.
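Model drift detection is often implemented by comparing the distribution of a model input or output in production against a baseline captured at validation time. The sketch below uses the Population Stability Index, PSI = Σ (a_i − e_i) · ln(a_i / e_i), where e_i and a_i are the baseline and current proportions in bin i. PSI is one widely used drift statistic chosen here for illustration; it is not necessarily the method used by watsonx.governance or xLM. The bin count, the epsilon, and the 0.1 / 0.25 cut-offs are commonly quoted rules of thumb and should be treated as assumptions to be fixed in your own validation plan.

```python
import math

def psi(baseline, current, n_bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a current sample.

    Bins are equal-width over the baseline range; current values outside that
    range are clipped into the first or last bin. eps replaces empty-bin
    proportions so the logarithm stays finite.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / n_bins or 1.0  # fall back to width 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            idx = math.floor((v - lo) / width)
            counts[min(max(idx, 0), n_bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    expected, actual = proportions(baseline), proportions(current)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))

def drift_status(value):
    # Commonly quoted PSI rule of thumb (assumption); set real limits in your validation plan.
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "monitor"
    return "investigate"

# Hypothetical example: a process parameter recorded at validation time vs. in production.
baseline = [x * 0.1 for x in range(100)]
shifted = [v + 3.0 for v in baseline]
print(drift_status(psi(baseline, baseline)))  # stable
print(drift_status(psi(baseline, shifted)))   # investigate
```

Equal-width bins keep the sketch short; quantile-based bins are a common alternative when the baseline distribution is heavily skewed. An "investigate" result would feed the incident-reporting loop described above.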

3.4. Maintenance and Improvement

AI systems require periodic updates and recalibration to maintain effectiveness:

Data Reassessment: Ensuring datasets remain relevant and representative over time.

Algorithm Updates: Implementing new techniques or insights to enhance model performance.

Stakeholder Feedback: Regularly engaging stakeholders to refine governance policies based on real-world use.

3.5. Sunsetting or Replacing Systems

When an AI system becomes obsolete or is replaced, governance protocols ensure a responsible transition:

Archival Processes: Safeguarding historical data for future reference or audits.

Impact Analysis: Assessing the broader effects of retiring an AI system, including data dependencies and user disruptions.
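Archival for audits benefits from a cryptographic fingerprint of each retired artifact, so that a reviewer can later confirm the archive has not changed. The sketch below shows one minimal way to do this with Python's standard library; the manifest layout, the function names, and the example model ID are hypothetical, and a real GxP archive would typically also need access controls, retention rules, and an audit trail that this code does not provide.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path

def sha256_of(path: Path) -> str:
    # Hash in chunks so large model files need not fit in memory.
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()

def build_manifest(model_id: str, artifacts: list[Path], reason: str) -> dict:
    """Record what was retired, when, why, and the SHA-256 of each artifact."""
    return {
        "model_id": model_id,
        "retired_at": datetime.now(timezone.utc).isoformat(),
        "reason": reason,
        "artifacts": {str(p): sha256_of(p) for p in artifacts},
    }

def verify_manifest(manifest: dict) -> list[str]:
    """Return artifacts that are missing or whose hash no longer matches."""
    return [
        name for name, digest in manifest["artifacts"].items()
        if not Path(name).exists() or sha256_of(Path(name)) != digest
    ]

# Hypothetical usage with a throwaway file standing in for model weights.
with tempfile.TemporaryDirectory() as tmp:
    weights = Path(tmp) / "model.bin"
    weights.write_bytes(b"example weights")
    manifest = build_manifest("example-model-v3", [weights], "replaced by v4")
    before = verify_manifest(manifest)  # archive intact
    weights.write_bytes(b"modified")
    after = verify_manifest(manifest)   # change detected
print(json.dumps(manifest, indent=2))
```

Storing the manifest separately from the artifacts it describes is what makes later verification meaningful.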

4.0. Challenges in Implementing cLCG

While cLCG offers numerous benefits, its effective implementation requires overcoming several challenges:

Resource Intensity: Continuous monitoring and updates demand dedicated resources and skilled personnel. xLM's guardrails for GxP provide a validated solution.

Interdisciplinary Coordination: Effective governance necessitates collaboration among data scientists, legal/regulatory experts, ethicists, and business leaders.

Technological Complexity: Ensuring explainability and interpretability in complex models, such as neural networks, can be daunting. xLM's data scientists build, test, and validate highly complex ML models.

Scalability: As organizations deploy more AI systems, scaling governance frameworks without compromising effectiveness becomes critical. xLM has partnered with IBM to deliver cLCG that is highly scalable.

5.0. Conclusion: Paving the Path for Continuously Validated AI

cLCG is more than a set of policies: it embodies a mindset that prioritizes responsibility, adaptability, continuous validation, and long-term value in AI systems. By embedding governance into every stage of the AI lifecycle, organizations can develop systems that are resilient, trustworthy, and aligned with ethical and regulatory standards. For this to be truly effective, your organization will need experts in AI, safety, and data science.

Governance ensures not only that AI functions as intended but also that it meets regulatory expectations, fostering trust among stakeholders and end users. As AI technologies continue to evolve, so too must our governance strategies. Organizations that embrace cLCG will not only mitigate risks and enhance operational efficiency but also position themselves as leaders in the responsible and sustainable deployment of AI. Because AI in GxP is still at a nascent stage, now is the ideal time to build guardrails into every project and keep them in place throughout its lifecycle.