1. Introduction

The age of real AI agents is here. Across industries, intelligent systems now perform complex, multi-step tasks that once required teams of experts.

As Ethan Mollick notes, the challenge is no longer whether AI can work — it’s how we should work with AI.

A recent OpenAI study showed that domain experts who collaborated with AI (having it draft first versions, then reviewing, correcting, and re-prompting) achieved results 40% faster and 60% cheaper than traditional workflows, while retaining full ownership of outcomes.

This hybrid model—humans steering, AI executing—defines the future of work. It’s not replacement; it’s augmentation.

2. From Knowledge Work to Regulated Work

At xLM, we bring this paradigm to demanding, compliance-driven life science companies.

In these fields, validation is the cornerstone of quality, but it is also one of the most manual, document-heavy, and time-consuming processes. Engineers spend weeks creating user requirement specifications (URS), mapping them to design and test cases, executing protocols, and collecting audit evidence. The result: high cost, human error risk, and limited agility.

Continuous Intelligent Validation (cIV) reimagines this.

It’s not an automation script or document generator—it’s an AI-powered validation ecosystem that continuously monitors, validates, and optimizes digital systems throughout their lifecycle.

3. Inside Continuous Intelligent Validation

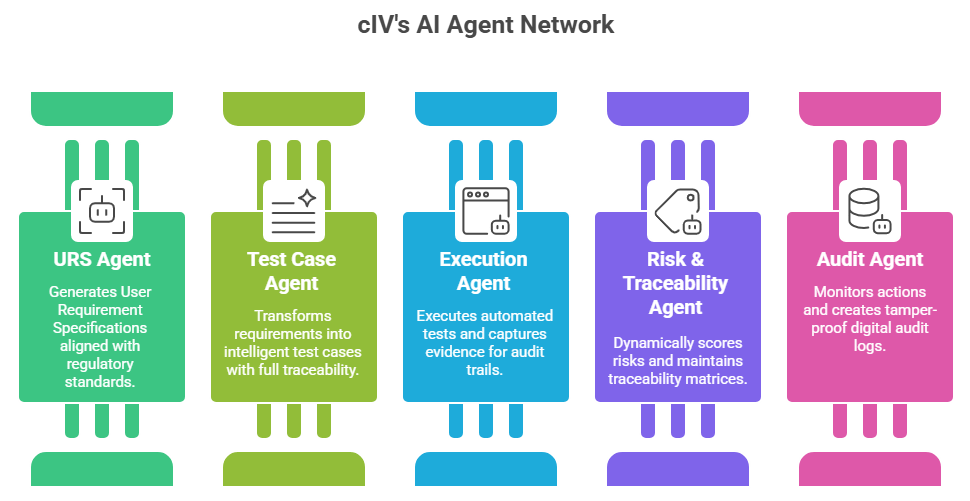

At the core of cIV lies a network of autonomous AI agents, each specialized for a key stage of the validation lifecycle:

URS Agent

Interprets process data, design documents, and regulatory standards to automatically generate User Requirement Specifications. It aligns with standards like Annex 11 and 21 CFR Part 11, ensuring every requirement is testable, traceable, and audit-ready.

Test Case Agent

Transforms requirements into intelligent test cases. It builds risk-weighted test coverage and auto-links each case to its source requirement, maintaining full bidirectional traceability.

Execution Agent

Conducts automated test execution in simulated or live environments, captures evidence (screenshots, logs, signatures), and compiles validated reports with built-in audit trails.

Risk & Traceability Agent

Dynamically scores risks based on impact and historical performance. It continuously updates traceability matrices as systems evolve, a foundational step toward continuous compliance.

Audit Agent

Monitors every action by AI and human users, creating tamper-proof digital audit logs. Each AI-generated artifact includes metadata identifying model version, confidence level, and human reviewer approvals.
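To make the traceability and risk-scoring ideas above more concrete, here is a minimal Python sketch. It is not xLM's implementation: the class names, the 1-to-5 impact scale, and the scoring formula (impact weighted by historical failure rate) are all assumptions chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    req_id: str
    impact: int  # hypothetical rating: 1 (low) to 5 (high)


@dataclass(frozen=True)
class ValidationCase:
    case_id: str
    covers: tuple  # IDs of the requirements this case verifies


def build_trace(cases):
    """Build forward (requirement -> cases) and backward (case -> requirements) links."""
    forward = defaultdict(set)
    backward = {}
    for case in cases:
        backward[case.case_id] = set(case.covers)
        for req_id in case.covers:
            forward[req_id].add(case.case_id)
    return forward, backward


def risk_score(req, failure_rate):
    """Illustrative score only: impact weighted by historical failure rate (0.0 to 1.0)."""
    return req.impact * (1.0 + failure_rate)


def untested(requirements, forward):
    """Requirements with no linked test case, i.e. gaps in the matrix."""
    return [r.req_id for r in requirements if not forward.get(r.req_id)]


requirements = [
    Requirement("URS-001", 5),
    Requirement("URS-002", 2),
    Requirement("URS-003", 4),
]
cases = [
    ValidationCase("TC-01", ("URS-001",)),
    ValidationCase("TC-02", ("URS-001", "URS-002")),
]
history = {"URS-001": 0.10, "URS-002": 0.0, "URS-003": 0.25}  # made-up failure rates

forward, backward = build_trace(cases)
ranked = sorted(requirements, key=lambda r: risk_score(r, history[r.req_id]), reverse=True)
gaps = untested(requirements, forward)
```

In this toy data set, `gaps` flags URS-003 as a requirement with no test coverage, and `ranked` orders the requirements so that the highest-risk ones can be reviewed first. Rebuilding the two maps whenever a requirement or test case changes is one simple way to keep the matrix current.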

4. Compliance by Design

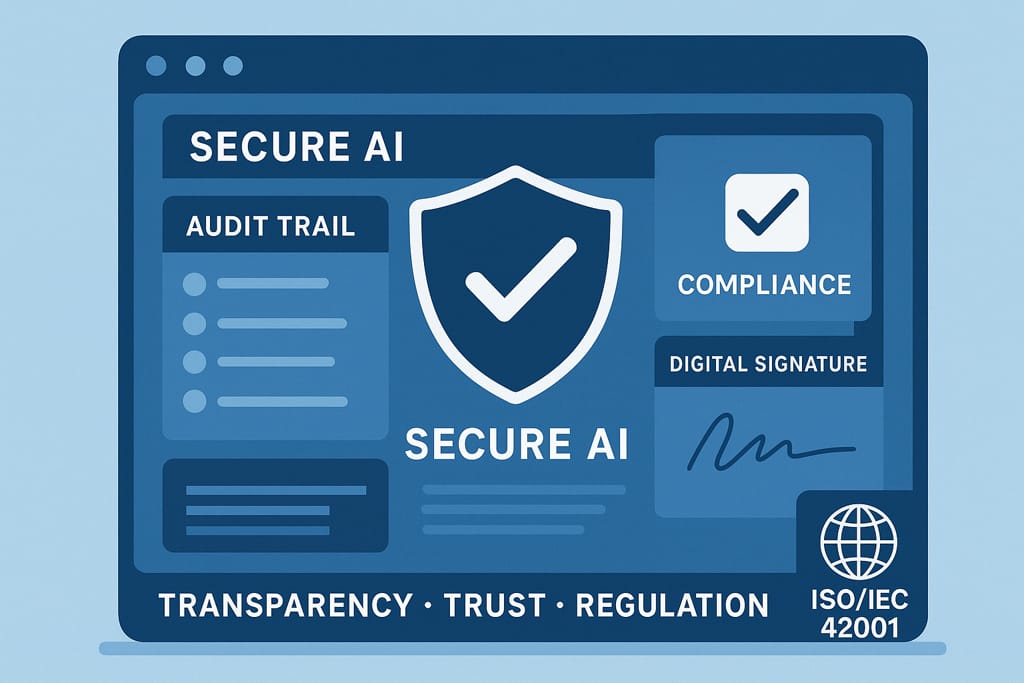

cIV is built on the principle of AI with governance. Every output is versioned, traceable, and reviewable—supporting full compliance with GAMP 5, Annex 11, Part 11, and emerging AI governance frameworks like ISO/IEC 42001 and Annex 22.

Unlike black-box automation tools, cIV ensures every AI decision is transparent. Validation engineers see how an agent derived a requirement or generated a test step, providing explainability for auditors and regulators.

This transparency bridges the gap between intelligent automation and regulatory confidence—something no other validation platform currently achieves.
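One common way to make a versioned, reviewable audit trail tamper-evident is a hash chain, where each entry stores the hash of the entry before it. The sketch below illustrates that general technique in Python; it is an assumption about how such a log could work, not a description of cIV's internals, and the field names (`model_version`, `confidence`, `reviewer`) simply mirror the metadata mentioned for the Audit Agent.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace

GENESIS = "0" * 64  # placeholder hash for the first entry in the chain


@dataclass(frozen=True)
class AuditEntry:
    actor: str          # agent name or human user
    action: str
    artifact_id: str
    model_version: str  # empty for purely human actions
    confidence: float
    reviewer: str       # empty until a human has reviewed
    prev_hash: str
    entry_hash: str = ""


def _digest(fields):
    """SHA-256 over a canonical JSON encoding of the entry fields."""
    payload = json.dumps(fields, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_entry(log, **fields):
    """Append a new entry linked to the hash of the previous one."""
    prev_hash = log[-1].entry_hash if log else GENESIS
    body = dict(fields, prev_hash=prev_hash)
    entry = AuditEntry(**body, entry_hash=_digest(body))
    log.append(entry)
    return entry


def verify_chain(log):
    """Return True only if every entry's hash and back-link are intact."""
    prev_hash = GENESIS
    for entry in log:
        body = asdict(entry)
        stored = body.pop("entry_hash")
        if entry.prev_hash != prev_hash or _digest(body) != stored:
            return False
        prev_hash = stored
    return True


log = []
append_entry(log, actor="urs-agent", action="draft_requirement", artifact_id="URS-001",
             model_version="model-v1", confidence=0.92, reviewer="")
append_entry(log, actor="j.doe", action="approve", artifact_id="URS-001",
             model_version="", confidence=1.0, reviewer="j.doe")
intact = verify_chain(log)

# Simulate tampering: silently change a stored confidence value.
log[0] = replace(log[0], confidence=0.99)
tamper_detected = not verify_chain(log)
```

A hash chain makes edits detectable rather than impossible. A production system would typically also need write-once storage, electronic signatures, and access controls to meet Part 11 expectations.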

5. Human-AI Collaboration in Validation

The human role in validation evolves.

With cIV, validation engineers become supervisors of intelligent systems, not document clerks. They review AI outputs, make risk-based decisions, and ensure contextual accuracy that only experience provides.

The workflow mirrors the human-AI collaboration model described in the introduction:

Delegate structured tasks to AI (drafting URS, generating test cases).

Review outputs, refine, and re-prompt if needed.

Override or complete tasks manually where nuance or judgment is critical.
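The three steps above can be sketched as a small review workflow in Python. Everything here is hypothetical: `fake_agent` stands in for whatever drafting agent is used, and the status names and history format are illustrative choices rather than part of cIV.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    DRAFTED = "drafted"
    APPROVED = "approved"
    OVERRIDDEN = "overridden"


@dataclass
class Task:
    name: str
    content: str = ""
    status: Status = Status.DRAFTED
    history: list = field(default_factory=list)  # (event, detail) pairs


def delegate(name, draft_fn, prompt):
    """Step 1: hand a structured task to the AI and record the prompt."""
    task = Task(name=name, content=draft_fn(prompt))
    task.history.append(("ai_draft", prompt))
    return task


def reprompt(task, draft_fn, prompt):
    """Step 2a: the reviewer refines the prompt and requests a new draft."""
    task.content = draft_fn(prompt)
    task.status = Status.DRAFTED
    task.history.append(("ai_redraft", prompt))


def approve(task, reviewer):
    """Step 2b: the reviewer accepts the current draft."""
    task.status = Status.APPROVED
    task.history.append(("approved", reviewer))


def override(task, reviewer, manual_content):
    """Step 3: the reviewer replaces the AI output with manual work."""
    task.content = manual_content
    task.status = Status.OVERRIDDEN
    task.history.append(("manual_override", reviewer))


def fake_agent(prompt):
    """Stand-in for a real drafting agent (assumption for this sketch)."""
    return f"DRAFT: {prompt}"


task = delegate("Label printer URS", fake_agent, "Draft URS for label printer")
reprompt(task, fake_agent, "Draft URS for label printer, include audit trail")
approve(task, reviewer="validation.engineer")

manual = delegate("Risk assessment", fake_agent, "Draft risk assessment")
override(manual, reviewer="validation.engineer", manual_content="Written by hand")
```

Keeping every prompt, redraft, approval, and override in `history` means the record shows who decided what, which is the point of keeping a human in the loop.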

This synergy drives efficiency and empowerment, freeing experts from repetitive documentation so they can focus on design integrity, root cause analysis, and continuous improvement.

6. Beyond Validation: Continuous Intelligence in Action

Built on xLM’s Continuous Intelligence Platform, cIV’s validation insights feed directly into broader operational analytics:

Predictive Compliance: Identify risk trends before audits.

Digital Twin Integration: Link validation data with process simulations.

AI Lifecycle Management: Track model updates, retraining, and drift in AI-enabled systems under validation.

This transforms validation from a static event into a living, data-driven feedback system that evolves with your operations.

7. Why It Matters

Without intention, automation risks drowning us in AI-generated noise—endless content, reports, and evidence that say little about real quality.

The alternative is purposeful automation: using platforms like cIV to empower experts to do what they do best—think, assess, decide, and improve.

The goal isn’t to replace people.

It’s to enable them to work in ways previously impossible—faster, safer, and with greater insight.

8. The Future of Work in GxP

As AI evolves, regulated industries face a defining question:

Will we let AI dictate our work, or redesign work to make the best use of AI?

Continuous Intelligent Validation offers a compelling answer—where compliance, intelligence, and human judgment coexist seamlessly.