1. Introduction: A signal, not a spike

Our recent LinkedIn Live, “GxP in the Age of AI: Real-World Perspectives from Pharma IT and Manufacturing,” resonated beyond expectations.

The session attracted 624 registrations, all organic, and drew 393 unique viewers with a healthy engagement rate.

For a technical, unscripted discussion on AI in regulated manufacturing, these numbers indicate:

The industry no longer asks if AI belongs in GxP but how.

Hosted by xLM Continuous Intelligence, the conversation featured Mr. Bob Buhlmann, a pharma leader with over 30 years in consulting and senior roles at Amgen, now leading Digital Quality and Strategy at AstraZeneca.

This was not a theory-heavy talk. The focus was execution: what works, where organizations struggle, and how to stay inspection-ready while moving fast.

2. AI in GxP Manufacturing: From curiosity to capability

The conversation began with a key question: How is AI impacting GxP manufacturing today?

The consensus was clear: AI is present, but adoption varies. Advanced analytics and automation are becoming common, but trust and intended use remain decisive. Technology is no longer the main constraint; governance and clarity are.

A key distinction emerged:

AI as decision support is gaining traction.

AI as decision maker remains a regulatory red line.

This framing set the tone for the discussion.

3. Trust, validation, and the reality of “validated AI”

A pressing concern was whether AI systems, especially GenAI, can be trusted in validated environments.

The discussion highlighted an important nuance:

Validation does not require determinism

It requires controlled intended use, transparency, and evidence

AI systems fit validated frameworks if:

Their scope is clear

Outputs are reviewed by humans

Audit trails are complete and explainable

This mirrors existing practices, like junior engineers drafting URS or test cases that SMEs review and approve.
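The three conditions above (clear scope, human review, complete audit trail) can be illustrated with a minimal Python sketch. It is not xLM's implementation or anything shown in the session. The class names, the `ai:` actor prefix, and the status values are all hypothetical, chosen only to show an AI draft that cannot become approved without a named human reviewer and a recorded rationale.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class AuditEvent:
    timestamp: str
    actor: str
    action: str
    detail: str


@dataclass
class DraftRecord:
    """An AI-drafted artifact (for example a URS section) awaiting SME review."""
    intended_use: str          # the documented scope of the AI's role
    ai_output: str             # the draft content produced by the AI
    status: str = "DRAFT"
    approved_by: Optional[str] = None
    audit_trail: List[AuditEvent] = field(default_factory=list)

    def log(self, actor: str, action: str, detail: str = "") -> None:
        # Every action is appended with a UTC timestamp; nothing is overwritten.
        stamp = datetime.now(timezone.utc).isoformat()
        self.audit_trail.append(AuditEvent(stamp, actor, action, detail))

    def approve(self, reviewer: str, rationale: str) -> None:
        # Assumption: non-human actors are identified by an "ai:" prefix.
        if reviewer.startswith("ai:"):
            self.log(reviewer, "approve_rejected", "non-human actor")
            raise PermissionError("Only a human reviewer may approve a draft")
        if not rationale.strip():
            raise ValueError("Approval requires a documented rationale")
        self.status = "APPROVED"
        self.approved_by = reviewer
        self.log(reviewer, "approve", rationale)


record = DraftRecord(
    intended_use="Draft URS sections for SME review (decision support only)",
    ai_output="URS-001: The system shall record operator ID for each batch step.",
)
record.log("ai:drafter", "draft_created")
record.approve("j.doe", "Checked against the process description")
```

The design choice here is that the decision point lives in `approve`, not in the drafting step, which keeps the AI in a decision-support role.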

Related LinkedIn Chatter: AI VALIDATION ISN'T ARCHITECTURE. IT'S MEDICINE.

4. AI agents in core GxP processes: Hype vs. reality

The discussion moved to a practical segment:

AI agents embedded in GxP workflows.

Examples included:

Validation documentation generation (URS, Test Scripts, Trace Matrices)

CAPA analysis

Batch record review

Risk assessments (FMEA)

The key insight:

Value lies not in replacing humans but in restructuring workflows around agentic capabilities.

When AI agents act as accelerators with humans in the loop, organizations see:

10× timeline compression

Sub-3-month ROI

Improved consistency and auditability

5. Regulators and inspections: What agencies actually ask

A recurring theme was regulatory engagement.

Contrary to fears, inspectors do not reject AI outright. They ask:

What is the intended use?

Where are decisions made?

What evidence supports the output?

How is risk controlled?

Unintended risk often arises not from AI itself but from how organizations describe it. Over-promising autonomy or using vague language can raise red flags during an inspection.

The recommendation was simple:

“Be precise. Be boring. Be evidence-driven.”

Related LinkedIn Chatter: From AI pilots to regulated scale: a blueprint for Pharma leaders

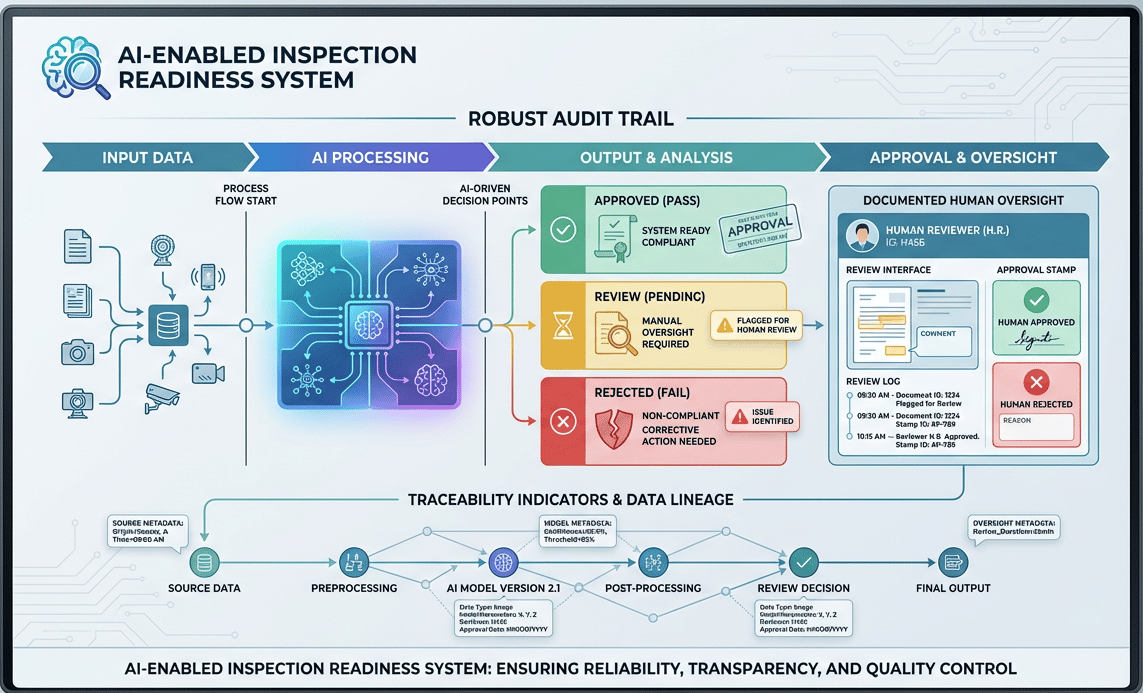

6. Inspection readiness for AI-enabled workflows

Inspection readiness in AI-enabled environments looks familiar, but it goes deeper.

What matters most:

Clear process boundaries

Documented human oversight

Robust audit trails

Traceability from input → output → approval

In some cases, AI-enabled systems improve inspection readiness by generating richer, more consistent evidence than manual processes.
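One way to picture traceability from input to output to approval is a hash-chained log, sketched below in Python. Hash chaining is an assumption made for illustration and was not described in the session. The function names, stage labels, and sample content are hypothetical. Each entry stores the SHA-256 hash of the previous entry, so editing an earlier step invalidates the chain.

```python
import hashlib
import json


def _digest(payload: dict, prev_hash: str) -> str:
    # Hash the step's content together with the previous step's hash.
    body = json.dumps(payload, sort_keys=True) + prev_hash
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_step(chain: list, stage: str, actor: str, content: str) -> list:
    """Return a new chain with one more linked entry (the input is not mutated)."""
    prev_hash = chain[-1]["hash"] if chain else ""
    payload = {"stage": stage, "actor": actor, "content": content}
    entry = dict(payload, prev_hash=prev_hash, hash=_digest(payload, prev_hash))
    return chain + [entry]


def verify(chain: list) -> bool:
    """Recompute every hash and check each link back to the first entry."""
    prev_hash = ""
    for entry in chain:
        payload = {key: entry[key] for key in ("stage", "actor", "content")}
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(payload, prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


chain = []
chain = append_step(chain, "input", "system", "Requirement source text, version 3")
chain = append_step(chain, "output", "ai-agent", "Draft test script TS-001")
chain = append_step(chain, "approval", "j.doe", "Approved after SME review")
print(verify(chain))
```

A real system would also need access control, time stamping, and secure storage. The sketch only shows how the three stages can be linked so that the chain of evidence is checkable.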

Related LinkedIn Chatter: As AI plays a greater role in manufacturing, auditors will increasingly focus on evaluating these AI systems for compliance.

7. Real-world agentic use cases: Beyond slides and demos

Several concrete examples showed what “pragmatic AI” looks like:

Autonomous validation execution with xLM’s cIV, including audit trails, execution logs, and session recordings

Plug-and-play temperature mapping with xLM’s cTM and environmental monitoring with xLM’s cEMS using IIoT sensors with real-time analytics and anomaly prediction

Regulatory impact analysis, where new regulations trigger automated SOP reviews and redlining

Automated FMEA drafting, accelerating early-stage risk analysis

The takeaway was striking:

If organizations reimagine processes instead of automating old ones, efficiency gains of 10–20× are realistic.

Related LinkedIn Chatter: #093: Continuous Intelligent Validation (cIV): From Months of Manual Validation to Minutes of Intelligent Execution

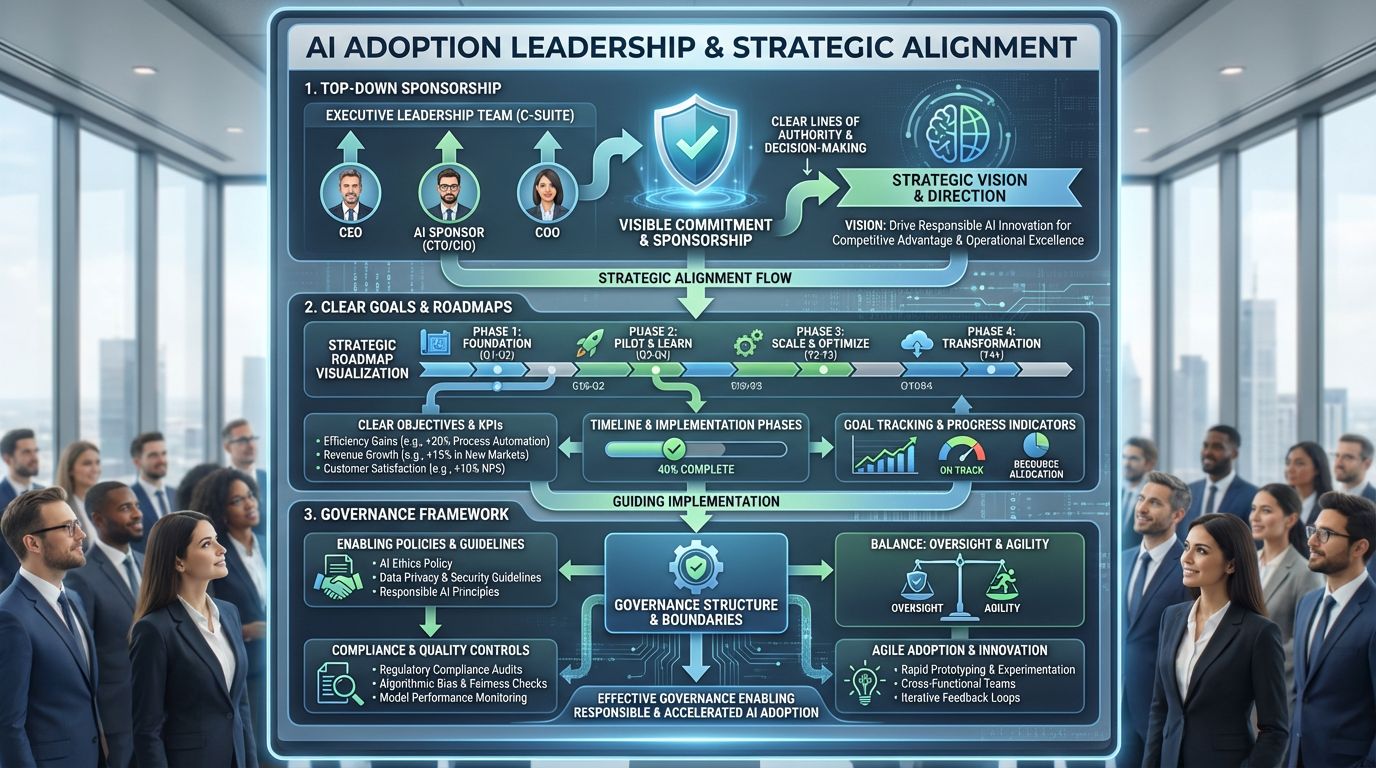

8. Leadership, governance, and organizational readiness

AI adoption cannot be delegated to IT or QA alone.

Successful implementations share three traits:

Top-down sponsorship

Clear goals and roadmaps

Governance that enables, not suffocates

Strong quality governance means better boundaries, not more paperwork.

Winning over skeptical stakeholders often starts with one thing:

“Show them working evidence, not strategy decks.”

Related LinkedIn Chatter: #081: The GxP AI leadership framework: Align → Activate → Amplify → Accelerate → Comply (A4C)

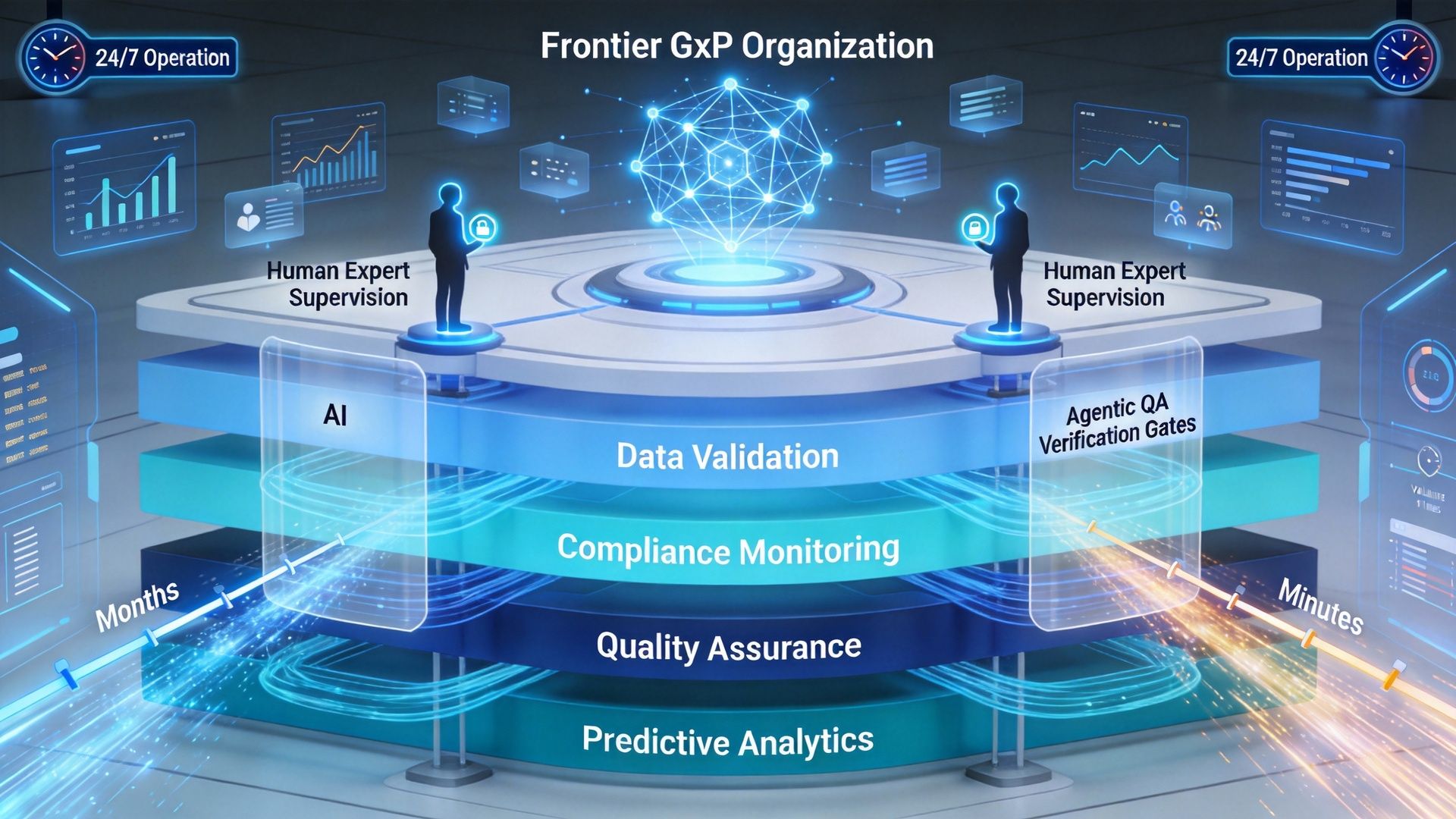

9. Frontier organizations: What’s coming next

The conversation closed by looking ahead.

A compelling vision emerged: Frontier GxP organizations powered by layers of AI agents working continuously, supervised by both:

Agentic QA layers

Human experts

Such organizations could operate 24×7, maintain continuous compliance, and compress validation timelines from months to minutes.

The technology exists.

The real question is organizational courage.

Related LinkedIn Chatter: How Pharma Can Transform Compliance? The Frontier Software Validation Organization Brings a Competitive Advantage.

10. Conclusion: Moving fast without losing control

If there was one message from this session, it was:

“You can move fast in GxP with AI, but only if you are clear on intended use, governance boundaries, and inspection-ready evidence.”

AI is no longer a future topic.

It is a present execution challenge and opportunity.

The winning organizations will not be those with the flashiest models, but those that combine pragmatism, quality discipline, and intelligent automation.