Credits: Stability.AI


Introduction

Introducing Project Manava, a major advance in GxP test automation. The project aims to transform how we handle the entire validation lifecycle by incorporating state-of-the-art AI agents.

At xLM, we recognize the growing complexity of software systems and the need for validation tools that keep pace with it. Project Manava is an end-to-end solution that uses AI not only to author your user requirements but also to create and run test cases with high precision and efficiency, with little or no human intervention. (Of course, to placate the QA folks, we always keep Human-in-the-Loop as a fixture in our AI-infused workflows.)

The name "Manava" was chosen deliberately. In Sanskrit, "Manava" means "human" or "of the human kind," which reflects the philosophy behind the project. Humans demonstrate adaptability, intelligence, and innovation, and Manava strives to bring these same qualities to AI test automation.

With Manava, we have set a new standard in automated testing. Our solutions are designed to meet and exceed the expectations of both contemporary software development and GxP compliance.

Elevating Testing Standards: Our mission with Manava is to raise the standard of automated testing. By applying AI models, we increase test coverage, minimize false positives and false negatives, and adapt to changes in the application under test. This reduces manual effort, improves overall reliability, and sets new industry benchmarks for effectiveness and performance.

Future-Ready Solution: Manava is designed to evolve alongside technological progress. We integrate AI into every part of our test automation process, which keeps our methods relevant and effective as software development changes rapidly. This forward-looking approach positions Manava to meet present requirements while also anticipating and addressing future challenges.

Leveraging AI at xLM

To align with current industry trends and strengthen our approach to test automation, Project Manava integrates state-of-the-art large language models. They streamline the creation of User Requirements Specifications (URS), automatically generate detailed test cases, and produce Test Plan Execution (TPE) reports for our clients.

The generated test cases are derived directly from the provided requirements. This gives thorough coverage without extensive manual intervention. The automation significantly reduces the likelihood of human error and lets us respond quickly to changing requirements. Agentic workflows take this further: intelligent agents independently manage and execute test tasks, which keeps our testing relevant and efficient.

AI-driven tools also refine test cases, identify edge cases, and propose new scenarios. By combining automation with intelligent orchestration, we turn conventional testing into a smarter, more automated framework.

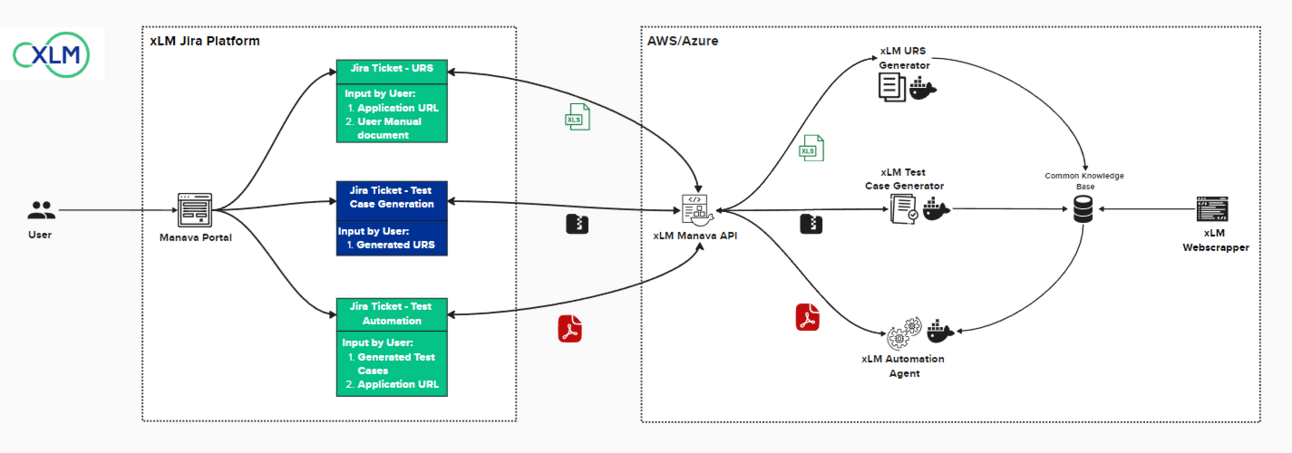

Architecture

Architecture of Project Manava

Manava is primarily composed of three parts, which are deployed as microservices on Azure:

URS Generation

Test Case Generation

Test Automation

These three components are accessible to customers through xLM’s Service Desk Portal.

The Service Desk Portal provides a user-friendly interface for working with Manava. Every customer-raised ticket is tracked and carries its relevant details, such as its audit trail, status, and reports.
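The sketch below makes the ticket tracking more concrete. It shows one possible way a ticket could carry its status, reports, and audit trail, and be routed to one of the three microservices. The article does not describe the portal's data model. The field names, status strings, and service names (`urs-generation-service` and so on) are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class TicketType(Enum):
    URS_GENERATION = "urs-generation"
    TEST_CASE_GENERATION = "test-case-generation"
    TEST_AUTOMATION = "test-automation"


# Hypothetical service names; the real Azure deployment names are not stated.
SERVICE_FOR_TYPE = {
    TicketType.URS_GENERATION: "urs-generation-service",
    TicketType.TEST_CASE_GENERATION: "test-case-generation-service",
    TicketType.TEST_AUTOMATION: "test-automation-service",
}


@dataclass(frozen=True)
class AuditEntry:
    timestamp: datetime
    actor: str
    action: str


@dataclass
class Ticket:
    ticket_id: str
    ticket_type: TicketType
    title: str
    status: str = "Open"
    audit_trail: list[AuditEntry] = field(default_factory=list)
    reports: list[str] = field(default_factory=list)

    def _log(self, actor: str, action: str) -> None:
        # This class only ever appends audit entries; it never edits or removes them.
        self.audit_trail.append(AuditEntry(datetime.now(timezone.utc), actor, action))

    def set_status(self, new_status: str, actor: str) -> None:
        self._log(actor, f"status: {self.status} -> {new_status}")
        self.status = new_status

    def attach_report(self, report_name: str, actor: str) -> None:
        self.reports.append(report_name)
        self._log(actor, f"report attached: {report_name}")

    @property
    def service(self) -> str:
        """Name of the microservice that should process this ticket."""
        return SERVICE_FOR_TYPE[self.ticket_type]
```

The audit entry is recorded before the status changes, so each entry captures both the old and the new value.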

1. URS Generation

URS Generation begins when customers upload their requirement sources (e.g., user manuals, the application URL) to our system. Using large language models and related AI techniques, we automatically produce a comprehensive User Requirements Specification (URS). This process turns user inputs into well-organized URS documents in a GxP-compliant format, ensuring consistency and accuracy.

URS Generation Workflow

Technologies and Methods Used

AI-Powered Language Models: Our URS Generation relies on large language models (LLMs) to interpret context and generate accurate, human-like text. These models translate user requirements into formal specifications with relevant, concise descriptions.

Retrieval-Augmented Generation (RAG): To improve the precision of our URS outputs, we use Retrieval-Augmented Generation (RAG), which combines retrieval with generative models. The system first retrieves pertinent information from a knowledge base. It then uses an LLM to write detailed specifications grounded in that information. This keeps our URS documents contextually appropriate and comprehensive.
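The retrieve-then-generate flow can be sketched as follows. This is a minimal illustration, not Manava's implementation. The keyword-overlap retriever stands in for the vector search described in the next paragraph. `llm` is any callable that takes a prompt string and returns text, a hypothetical hook for whichever LLM client is used. The prompt wording is also illustrative.

```python
from typing import Callable


def retrieve(query: str, knowledge_base: list[str], k: int = 2) -> list[str]:
    """Return the k passages sharing the most words with the query.
    A simple stand-in for embedding-based vector search."""
    query_terms = set(query.lower().split())
    ranked = sorted(
        knowledge_base,
        key=lambda passage: len(query_terms & set(passage.lower().split())),
        reverse=True,  # sorted() stays stable, so ties keep knowledge-base order
    )
    return ranked[:k]


def build_prompt(user_input: str, passages: list[str]) -> str:
    """Ground the generation step in the retrieved context."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Using only the context below, rewrite the input as formal URS statements, "
        "one per line, each starting with 'The system shall'.\n\n"
        f"Context:\n{context}\n\nInput:\n{user_input}\n"
    )


def generate_urs(user_input: str, knowledge_base: list[str],
                 llm: Callable[[str], str], k: int = 2) -> str:
    """Retrieve first, then generate: the LLM only sees the top-k passages."""
    passages = retrieve(user_input, knowledge_base, k)
    return llm(build_prompt(user_input, passages))
```

Passing the LLM in as a callable keeps the retrieval and prompt-building steps testable without a model. For example, `lambda p: p` simply echoes the prompt.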

Vector Databases for Efficient Retrieval: Our URS generation relies on vector databases for efficient similarity search. Text is converted into high-dimensional embedding vectors. The passages most relevant to a query can then be found quickly by comparing vectors. This retrieval step underpins the precise generation of URS content.
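The sketch below is a rough illustration of similarity search. It stores unit-length vectors and ranks passages by cosine similarity. The `embed` function is a toy hashed bag-of-words stand-in, an assumption made so the example is self-contained. A real pipeline would call an embedding model and a vector database instead of this in-memory class.

```python
import math
import zlib


def embed(text: str, dim: int = 256) -> list[float]:
    """Toy embedding (hashed bag-of-words, L2-normalised); stands in for a real embedding model."""
    vec = [0.0] * dim
    for token in text.lower().split():
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


class InMemoryVectorStore:
    """Minimal vector index: stores (text, vector) pairs and does brute-force cosine search."""

    def __init__(self, dim: int = 256) -> None:
        self.dim = dim
        self.items: list[tuple[str, list[float]]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text, self.dim)))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        q = embed(query, self.dim)
        # All vectors are unit length, so the dot product equals cosine similarity.
        scored = [(text, sum(a * b for a, b in zip(q, v))) for text, v in self.items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]
```

Brute-force scoring is linear in the number of stored passages. Dedicated vector databases exist largely to replace this step with approximate nearest-neighbour indexes.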

Service Desk Integration: The URS Generation service integrates with the Service Desk Portal. The portal gives users an intuitive interface to submit their requirements and monitor the progress of their requests. Users receive real-time updates on ticket status and document generation progress, so all customer interactions are managed efficiently and transparently.

Together, vector databases, LLMs, and RAG make our URS Generation process faster and more accurate while minimizing manual work. They also help ensure that the specifications meet stringent standards of quality and relevance.

Note: After the URS is generated, QA personnel review it to confirm that all requirements are covered and that it meets the customer's needs.

2. Test Case Generation

Once the User Requirements Specification (URS) is uploaded, the requirements are extracted from it. Related requirements are grouped into batches, and each batch is used to form a consolidated requirement.

Requirements are batched based on similarities in their titles, workflow execution, and other relevant parameters. Each consolidated requirement is then used to develop a single test case, which may include multiple scenarios.
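The sketch below shows one possible way to batch requirements by title similarity, using Python's standard `difflib.SequenceMatcher`. It covers only the title criterion; workflow execution and the other parameters are not modeled. The 0.6 threshold, the greedy grouping strategy, and the `id`/`title`/`description` keys are all illustrative assumptions.

```python
from difflib import SequenceMatcher


def title_similarity(a: str, b: str) -> float:
    """Similarity ratio in [0, 1] between two requirement titles."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def batch_requirements(requirements: list[dict], threshold: float = 0.6) -> list[list[dict]]:
    """Greedy single pass: each requirement joins the first batch whose seed
    (first) title is similar enough, otherwise it starts a new batch."""
    batches: list[list[dict]] = []
    for req in requirements:
        for batch in batches:
            if title_similarity(req["title"], batch[0]["title"]) >= threshold:
                batch.append(req)
                break
        else:  # no existing batch matched
            batches.append([req])
    return batches


def consolidate(batch: list[dict]) -> dict:
    """Merge a batch into one consolidated requirement, keeping each source description."""
    return {
        "ids": [r["id"] for r in batch],
        "title": batch[0]["title"],
        "description": "\n".join(f"{r['id']}: {r['description']}" for r in batch),
    }
```

Comparing only against each batch's first title keeps the pass simple. A production grouping would likely use richer features, such as embeddings or workflow metadata.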

To create precise and effective tests, the agent requires two key components:

The consolidated requirement, along with the descriptions of the requirements it contains.

A knowledge repository containing guidelines for various scenarios.

This knowledge base is built by a scraper that crawls the SUT (Software Under Test) and its documentation and extracts their content.
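The sketch below shows one way such a scraper might work: a breadth-first crawl that stays on the starting host and keeps each page's visible text. It uses only the standard library (`html.parser`, `urllib.parse`). The `fetch` function is passed in so the sketch can be tried without network access; in practice it could wrap `urllib.request.urlopen`. The page limit and the same-host restriction are assumptions, not details from Manava.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urljoin, urlparse


class PageParser(HTMLParser):
    """Collects visible text and link targets from one HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.text_parts: list[str] = []
        self.links: list[str] = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.text_parts.append(data.strip())


def crawl(start_url: str, fetch: Callable[[str], str], max_pages: int = 50) -> dict[str, str]:
    """Breadth-first crawl restricted to start_url's host; returns {url: page_text}."""
    host = urlparse(start_url).netloc
    seen, queue, pages = {start_url}, deque([start_url]), {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        parser = PageParser()
        parser.feed(fetch(url))
        parser.close()  # flush any buffered trailing text
        pages[url] = " ".join(parser.text_parts)
        for href in parser.links:
            absolute = urljoin(url, href).split("#")[0]
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return pages
```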

Using these components, we generate detailed test cases as .feature (Gherkin) files and web-action files.
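For illustration, a consolidated requirement could be rendered into a .feature file along these lines. The `Feature`/`Scenario` classes and the `to_gherkin` helper are hypothetical. Only the output follows standard Gherkin keywords (`Feature`, `Scenario`, `Given`, `When`, `Then`, `And`).

```python
from dataclasses import dataclass, field


@dataclass
class Scenario:
    name: str
    given: list[str]
    when: list[str]
    then: list[str]


@dataclass
class Feature:
    name: str
    description: str
    scenarios: list[Scenario] = field(default_factory=list)


def _steps(keyword: str, steps: list[str]) -> list[str]:
    # The first step uses the keyword (Given/When/Then); follow-up steps use "And".
    return [f"    {keyword if i == 0 else 'And'} {step}" for i, step in enumerate(steps)]


def to_gherkin(feature: Feature) -> str:
    """Render a feature and its scenarios as Gherkin text."""
    lines = [f"Feature: {feature.name}", f"  {feature.description}", ""]
    for sc in feature.scenarios:
        lines.append(f"  Scenario: {sc.name}")
        lines += _steps("Given", sc.given) + _steps("When", sc.when) + _steps("Then", sc.then)
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"
```

Emitting secondary steps with `And` matches how Gherkin chains several steps of the same kind.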

The .feature files serve as backups for the web-action files, which contain the test automation scripts. The web-action files tell the Test Automation agent how to interact with web page elements and execute the test case.

Test Case Generation Workflow

Technologies and Methods Used

CrewAI: CrewAI is a Python framework for orchestrating multiple role-based AI agents that collaborate on tasks. In our project, CrewAI helps interpret customer requirements so that the generated test cases meet user expectations.

FastAPI: FastAPI is a modern, high-performance Python web framework for building APIs based on standard Python type hints. It enables rapid development and deployment of RESTful APIs. In our project, FastAPI forms the core of the application and handles interactions between users and the test case generation engine.

Pandas: Pandas is an open-source Python library for data analysis and manipulation. It provides data structures such as DataFrames that simplify the handling of structured data. In our project, Pandas is used to manage and analyze customer requirements as they are transformed into actionable test cases.

EmbedChain: EmbedChain is an open-source framework for building retrieval-augmented (RAG) applications. It loads and chunks data, creates embeddings, and stores them for retrieval. Integrating EmbedChain improves the contextual understanding of customer requirements, which leads to more relevant and precise test case generation.

Azure Blob Storage: Azure Blob Storage is Microsoft Azure's object storage service for large volumes of unstructured data. It is highly scalable and supports secure storage and retrieval. In our project, it stores uploaded customer requirements and preserves their integrity and availability for subsequent processing.

3. Test Automation

Once the test case (web-action) file is uploaded, execution begins and proceeds as follows:

The web actions content is first read and parsed to extract instructions. These instructions are then sequentially provided to the agent.

The agent receives the instruction along with the current state of the web application, including the HTML content of the page and a screenshot.

Based on this data, the agent determines the next steps, repeating this process until the termination condition is satisfied.
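The execution loop described above can be sketched as an observe-decide-act cycle. The article does not publish the following details, so all of them are assumptions:

- the web-action file format (one instruction per line, `#` for comments);
- the `Action` shape;
- the `Browser` and agent interfaces.

A Playwright-backed `Browser` could build its snapshot from `page.content()` and `page.screenshot()`, and the agent would be an LLM call. Both are left abstract here.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


def parse_web_actions(content: str) -> list[str]:
    """Assumed format: one instruction per line; blank lines and '#' comments are skipped."""
    instructions = []
    for line in content.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            instructions.append(line)
    return instructions


@dataclass
class PageState:
    html: str          # current page HTML
    screenshot: bytes  # current page screenshot (e.g. PNG bytes)


@dataclass
class Action:
    kind: str        # e.g. "click" or "fill"; "done" means the instruction is satisfied
    target: str = ""
    value: str = ""


class Browser(Protocol):
    def snapshot(self) -> PageState: ...
    def perform(self, action: Action) -> None: ...


# Hypothetical agent signature: (instruction, current page state) -> next action.
Agent = Callable[[str, PageState], Action]


def execute(content: str, browser: Browser, agent: Agent,
            max_steps: int = 10) -> list[tuple[str, list[Action]]]:
    """Give instructions to the agent one at a time; for each, repeat
    observe -> decide -> act until the agent returns "done"."""
    log: list[tuple[str, list[Action]]] = []
    for instruction in parse_web_actions(content):
        taken: list[Action] = []
        for _ in range(max_steps):
            action = agent(instruction, browser.snapshot())
            if action.kind == "done":
                break
            browser.perform(action)
            taken.append(action)
        else:  # the agent never reported "done"
            raise RuntimeError(f"step limit reached for instruction: {instruction!r}")
        log.append((instruction, taken))
    return log
```

The `max_steps` cap is one possible termination safeguard. An agent that never reports `done` then fails that instruction instead of looping forever.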

Test Automation Workflow

Technologies and Methods Used

LangGraph: LangGraph is a library from the LangChain ecosystem for building stateful, multi-step LLM agent workflows modeled as graphs of nodes and edges. It is well suited to loops like the one above, in which the agent repeatedly observes the page and decides its next action. Through LangGraph, we help ensure that the executed test cases align with the software's intended functionality and behavior.

Playwright: Playwright is a versatile automation library for writing reliable end-to-end tests for web applications. It supports multiple browser engines (Chromium, Firefox, and WebKit) and offers a comprehensive API for interacting with web elements. In our project, Playwright executes the test cases, simulating user interactions and validating the application's expected behavior. Its cross-browser support enables thorough testing.

Walkthrough

1. URS Generation

To initiate User Requirements Specification (URS) generation, users submit a ticket with the following information:

URS Ticket Title: The title of the ticket intended for URS generation.

Description: A comprehensive description of the ticket.

Requirement File: A file that includes the necessary requirements for the URS.

2. Test Case Generation

To initiate the test case generation process, users need to submit a ticket containing the following details:

Test Case Ticket Title: The title assigned to the test case generation ticket.

Description: A detailed explanation of the ticket.

User Requirement Specification File: The file containing the previously generated and validated URS.

3. Test Automation

To commence the test automation process, users need to create a ticket including the following information:

Test Automation Title: The title assigned to the ticket for the execution of test automation.

Description: A detailed explanation of the ticket.

Test Script File: The test script generated and verified in the previous step.
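The three ticket forms in this walkthrough share a pattern: a title, a description, and one input file. A hypothetical pre-submission check could enforce that pattern. The ticket-type keys and field names below are assumptions that mirror the walkthrough, not the portal's real schema.

```python
# Hypothetical required fields per ticket type, mirroring the walkthrough above.
REQUIRED_FIELDS = {
    "urs-generation": ("title", "description", "requirement_file"),
    "test-case-generation": ("title", "description", "urs_file"),
    "test-automation": ("title", "description", "test_script_file"),
}


def validate_ticket(ticket_type: str, fields: dict[str, str]) -> list[str]:
    """Return a list of problems; an empty list means the ticket can be submitted."""
    if ticket_type not in REQUIRED_FIELDS:
        return [f"unknown ticket type: {ticket_type}"]
    # Treat missing and whitespace-only values the same way.
    return [f"missing field: {name}" for name in REQUIRED_FIELDS[ticket_type]
            if not fields.get(name, "").strip()]
```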

A Sample Page from the Executed Protocol

Conclusion

With our latest AI-based Continuous Validation platform (Project Manava), we are the first to accomplish an end-to-end SDLC without any human intervention. (Note: Human-in-the-Loop is always an option to make sure every deliverable is QA-approved.) Our Agentic Framework uses the latest AI technologies to automatically generate a URS, then the test cases, and finally to execute those test cases. These steps take anywhere from a few minutes to a couple of hours, depending on the complexity of the software application under test.

Project Manava is a significant step in our roadmap to bring AI into computer system validation (CSV). With Agentic AI, we are taking the FDA’s Computer Software Assurance (CSA) concept and guidelines to new heights.