Credits: ContinuousGPT for Continuous Smiles!

1.0 Introducing ContinuousGPT

How many document repositories do you have to juggle just to find the relevant information? In a typical life science enterprise, it could be 10 or even more. It is a chore to query information hidden in legacy “brick style” 1990s applications. In an industry that thrives on paper and documents, digital information retrieval from disparate sources must be efficient. Common sense tells us as much. But common sense in a regulated world is uncommon: in the name of regulation, every task becomes an onerous one. At times, finding digital information is even worse than rummaging through reams of good old paper.

Introducing ContinuousGPT, where you can chat with your data irrespective of where it is stored and in what format. ContinuousGPT combines advanced natural language processing (NLP) techniques with robust document retrieval systems, allowing you to interact with vast datasets through conversational queries. By shifting to AI-driven chat, you can streamline workflows, improve decision-making, and ensure that critical information is readily accessible.

ContinuousGPT can integrate with platforms like OneDrive, Teams, Confluence, SharePoint, Veeva and more, revolutionizing how you interact with digital information. Think “chat” and not “clicks” and “frowns”! Needless to say, ContinuousGPT is delivered “validated”!

2.0 Why ContinuousGPT?

When there is ChatGPT, why do I need ContinuousGPT? The answer is simple:

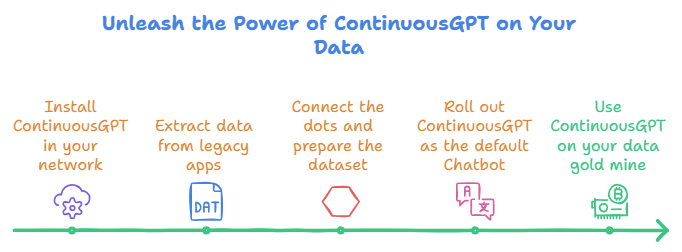

ContinuousGPT can be installed in your network, ensuring that your data stays within your IT boundaries.

Extracting data from 1990s “brick style” apps so that an AI agent can quickly make sense of it takes some heavy lifting. We can do that for you.

Connecting the dots to prepare the dataset for training also takes some heavy lifting. Our team can apply techniques such as knowledge graphs to build meaningful relationships between disparate databases.

ContinuousGPT will be rolled out as the default chatbot for all our products and services. We can use the same tech stack for your data gold mine.

3.0 Features

Azure AD Authentication: Users can securely log in through Microsoft Azure Active Directory (AD) for seamless access.

Platform-Specific Chat Agent: Users can connect to chat agents dedicated to specific platforms (OneDrive, Confluence, SharePoint, Veeva, etc.).

Start New Chat: Users can easily initiate a new conversation with an AI agent trained on the historical data.

Conversational Responses: Users can interact with the AI agent and receive responses specific to the dataset and documents on the platform.

Stored Chat History: All user conversations are stored server-side, ensuring continuity and reference for future use.

Chat History View: Users can view all previous conversations similar to the ChatGPT interface.

Continue Previous Chats: Users can pick up and continue any prior conversation from the chat history at any time.

Citations: Each chatbot response also contains a citation to the document from which the information was extracted.
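The stored-history, history-view, and continue-chat features imply a small server-side data model. The sketch below is one minimal way to model it, assuming an in-memory dictionary in place of whatever database the real backend uses; the class and method names (`ChatHistoryStore`, `start_chat`, `continue_chat`, etc.) are hypothetical, not ContinuousGPT's actual code.

```python
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Message:
    role: str  # "user" or "assistant"
    content: str
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


@dataclass
class Chat:
    chat_id: str
    user_id: str
    platform: str  # which platform agent the chat targets, e.g. "Confluence"
    messages: list[Message] = field(default_factory=list)


class ChatHistoryStore:
    """Hypothetical in-memory stand-in for server-side chat storage."""

    def __init__(self) -> None:
        self._chats: dict[str, Chat] = {}

    def start_chat(self, user_id: str, platform: str) -> Chat:
        # "Start New Chat": create an empty conversation with a fresh ID.
        chat = Chat(chat_id=str(uuid.uuid4()), user_id=user_id, platform=platform)
        self._chats[chat.chat_id] = chat
        return chat

    def add_message(self, chat_id: str, role: str, content: str) -> None:
        self._chats[chat_id].messages.append(Message(role, content))

    def list_chats(self, user_id: str) -> list[Chat]:
        # "Chat History View": only the requesting user's conversations.
        return [c for c in self._chats.values() if c.user_id == user_id]

    def continue_chat(self, chat_id: str, user_id: str) -> Chat:
        # "Continue Previous Chats": resume a chat, refusing other users' chats.
        chat = self._chats[chat_id]
        if chat.user_id != user_id:
            raise PermissionError("chat belongs to another user")
        return chat


store = ChatHistoryStore()
chat = store.start_chat("alice", "Confluence")
store.add_message(chat.chat_id, "user", "Where is the latest deviation SOP?")
resumed = store.continue_chat(chat.chat_id, "alice")
print(len(resumed.messages))  # 1
```

Scoping `list_chats` and `continue_chat` to the requesting user keeps one user's history out of another's view; in a real deployment the user ID would presumably come from the authenticated Azure AD session rather than a plain string.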

4.0 Architecture

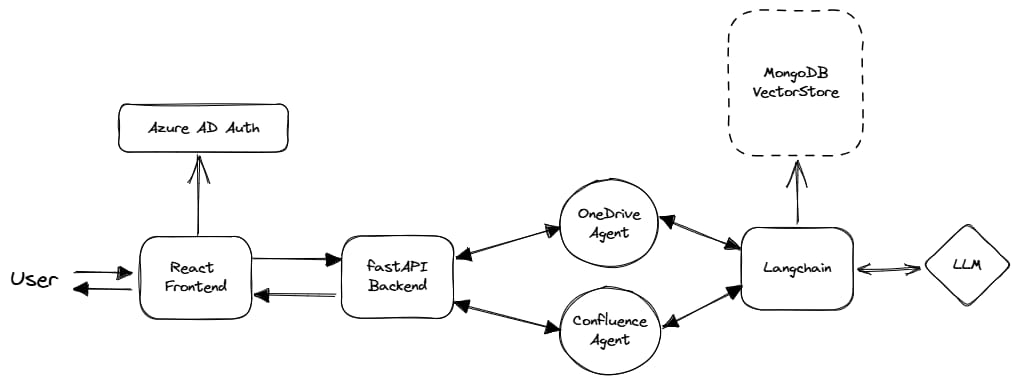

High-Level Architecture

4.1 RAG (Retrieval-Augmented Generation)

RAG (Retrieval-Augmented Generation) is a groundbreaking approach in the realm of generative AI that integrates external knowledge sources with large language models (LLMs) to enhance response accuracy and reliability. By connecting LLMs to authoritative knowledge bases, RAG enables AI models to provide more precise and up-to-date information to users, fostering trust through source attribution and citations.

This innovative technique offers developers greater control over chat applications, allowing for efficient testing, troubleshooting, and customization of information sources accessed by the AI model. RAG is transforming various industries by enabling AI models to interact with diverse knowledge bases and deliver specialized assistance tailored to specific domains.
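To make the retrieve-then-generate loop concrete, here is a minimal RAG sketch. It assumes a simple word-count cosine similarity in place of the vector embeddings and vector store a production system would typically use, and it stops at building the augmented prompt rather than calling any specific LLM API. All function names and the sample documents are illustrative, not part of ContinuousGPT.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity of word-count vectors (a stand-in for embeddings).
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return up to k (source, text) pairs most similar to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(text))), src, text) for src, text in docs.items()]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(src, text) for score, src, text in scored[:k] if score > 0]


def build_prompt(query: str, hits: list[tuple[str, str]]) -> str:
    """Augment the question with retrieved passages, numbered for citation."""
    context = "\n".join(f"[{i}] ({src}) {text}" for i, (src, text) in enumerate(hits, 1))
    return (
        "Answer using only the sources below and cite them as [n].\n"
        f"{context}\n\nQuestion: {query}"
    )


docs = {  # illustrative sample documents, not real content
    "SharePoint/SOP-101.docx": "Deviation reports must be filed within 24 hours.",
    "Confluence/Onboarding": "New hires complete GxP training in week one.",
}
question = "When must a deviation report be filed?"
hits = retrieve(question, docs, k=1)
prompt = build_prompt(question, hits)
print(prompt)  # this prompt would then be sent to an LLM (call not shown)
```

Numbering each retrieved passage with its source path is one straightforward way to support source attribution: the model is asked to cite `[n]`, and each `[n]` maps back to a specific document.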

4.1.1 Why RAG?

RAG is often preferred over training or retraining a Large Language Model (LLM) on proprietary data. RAG improves LLM output by supplying accurate, relevant information from external sources, including domain-specific content the base model never saw during training. Because RAG can draw on a wide array of external data sources at query time, responses can stay current and detailed, reducing the risk of outdated or inaccurate information common in standalone models.

Moreover, RAG allows for customization and can be combined with fine-tuning: by choosing which knowledge sources the model retrieves from, it can be tailored to specific needs, domains, languages, or styles that were previously challenging for traditional LLMs to address effectively. By integrating external knowledge sources, RAG models offer responses that are more up-to-date, accurate, detailed, and contextually relevant than those of their traditional counterparts.

5.0 User Interface (UI)

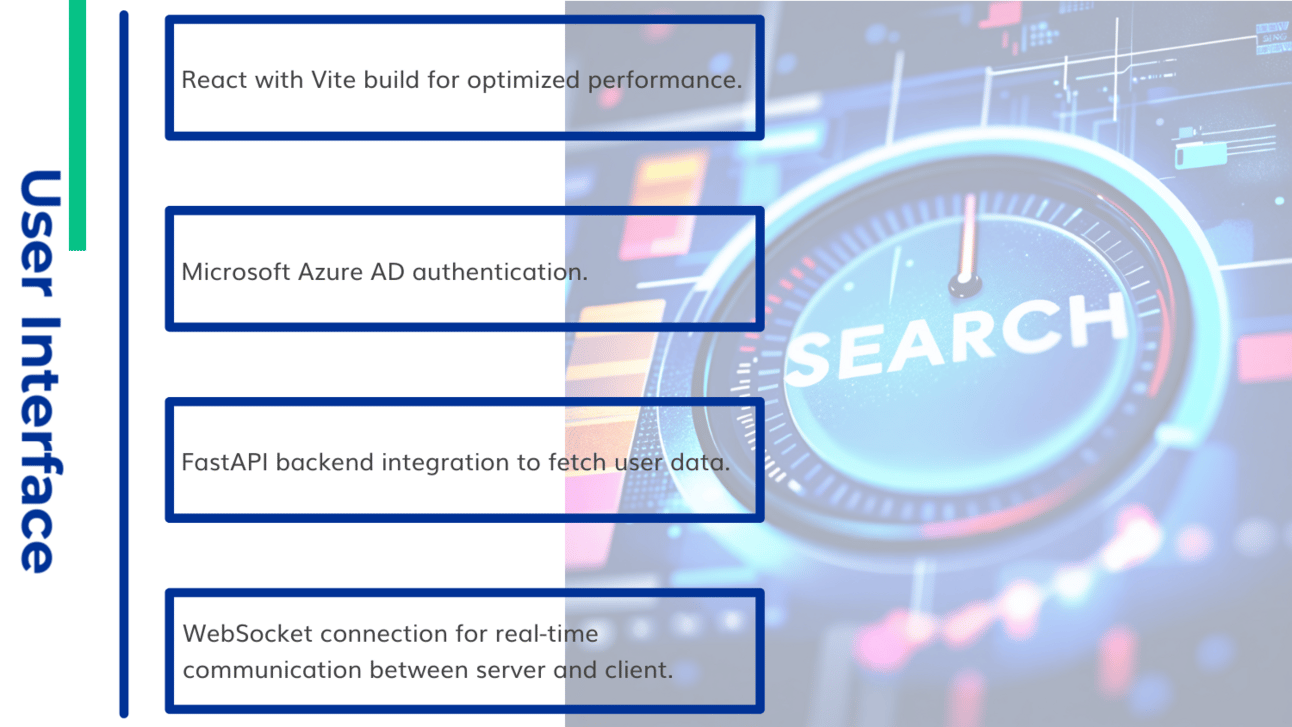

The frontend of the Intranet Chatbot is built using React, optimized with a Vite build for production, ensuring faster performance and efficient asset bundling. The application utilizes React's state management to maintain session data, manage chat history, and facilitate smooth navigation between chats. Authentication is handled through Microsoft Azure AD, providing secure login and access to the application. For data retrieval, the frontend communicates with a FastAPI backend API to fetch user-specific data and information. Additionally, a WebSocket connection between the client and server is used to send and receive messages in real time, ensuring a dynamic and responsive chat experience.

6.0 Application Logic

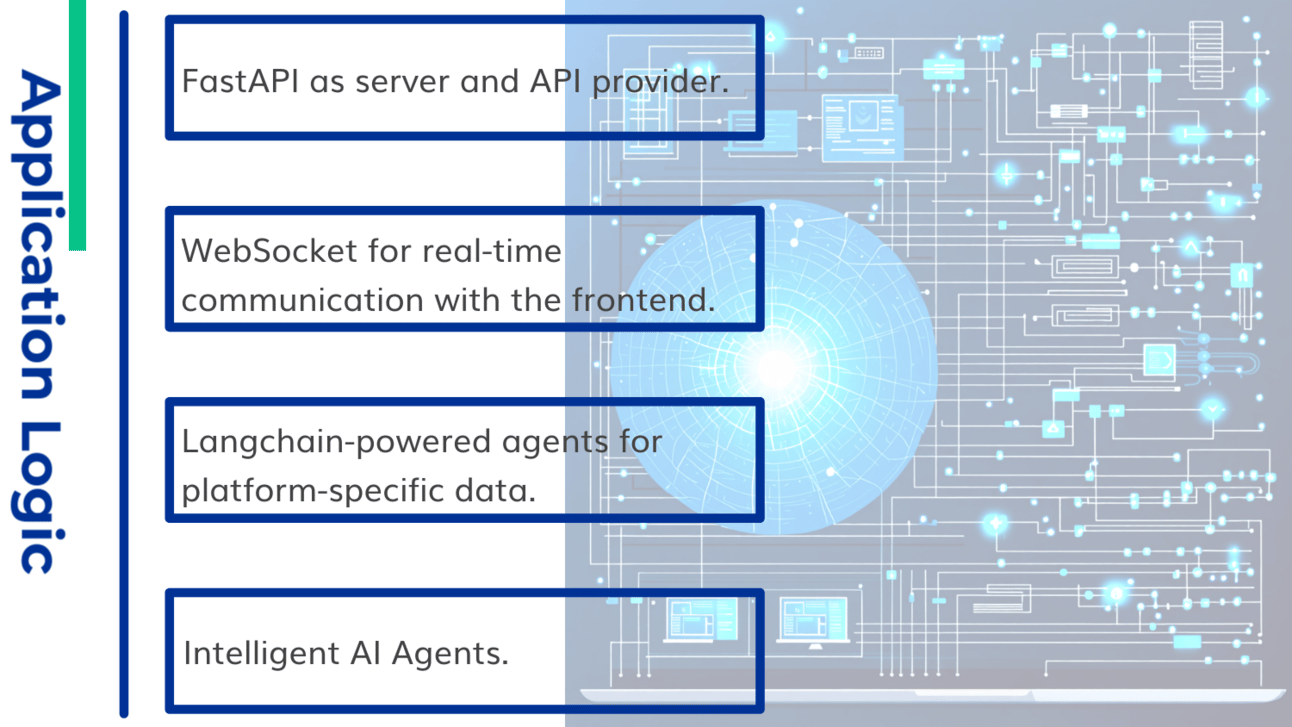

The backend of the chatbot is powered by FastAPI, which serves as both the server and the API, facilitating smooth communication with the frontend. WebSockets are employed for real-time interaction, enabling seamless chat communication between the user and the system. The backend uses LangChain to create intelligent agents for each data platform, such as OneDrive and Confluence, ensuring that the information retrieved is relevant and platform-specific. User permissions are carefully handled to restrict access to agents based on predefined roles.
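The role-based restriction on agents can be sketched in plain Python. This assumes a hypothetical mapping from role names to the platforms each role may query, and uses stub functions where the real backend would use LangChain agents; none of the role names or function names reflect ContinuousGPT's actual configuration.

```python
from typing import Callable

# Hypothetical role -> platform permissions. A real deployment would more
# likely derive roles from Azure AD token claims than from a hard-coded dict.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "qa": {"SharePoint", "Veeva"},
    "engineering": {"Confluence", "OneDrive"},
    "admin": {"SharePoint", "Veeva", "Confluence", "OneDrive"},
}

# Stub per-platform agents standing in for LangChain agents (hypothetical).
AgentFn = Callable[[str], str]
AGENTS: dict[str, AgentFn] = {
    platform: (lambda query, p=platform: f"[{p} agent] answer to: {query}")
    for platform in ("SharePoint", "Veeva", "Confluence", "OneDrive")
}


def allowed_platforms(roles: list[str]) -> set[str]:
    """Union of the platforms granted by all of the user's roles."""
    granted: set[str] = set()
    for role in roles:
        granted |= ROLE_PERMISSIONS.get(role, set())
    return granted


def route_query(roles: list[str], platform: str, query: str) -> str:
    """Dispatch the query to a platform agent only if some role grants access."""
    if platform not in allowed_platforms(roles):
        raise PermissionError(f"no role grants access to the {platform} agent")
    return AGENTS[platform](query)


print(route_query(["qa"], "Veeva", "List open CAPAs"))
# [Veeva agent] answer to: List open CAPAs
```

Checking permissions before dispatch, rather than inside each agent, keeps the access rule in one place; in a FastAPI backend such a check could run in the WebSocket handler before any agent is invoked.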

7.0 Deployment

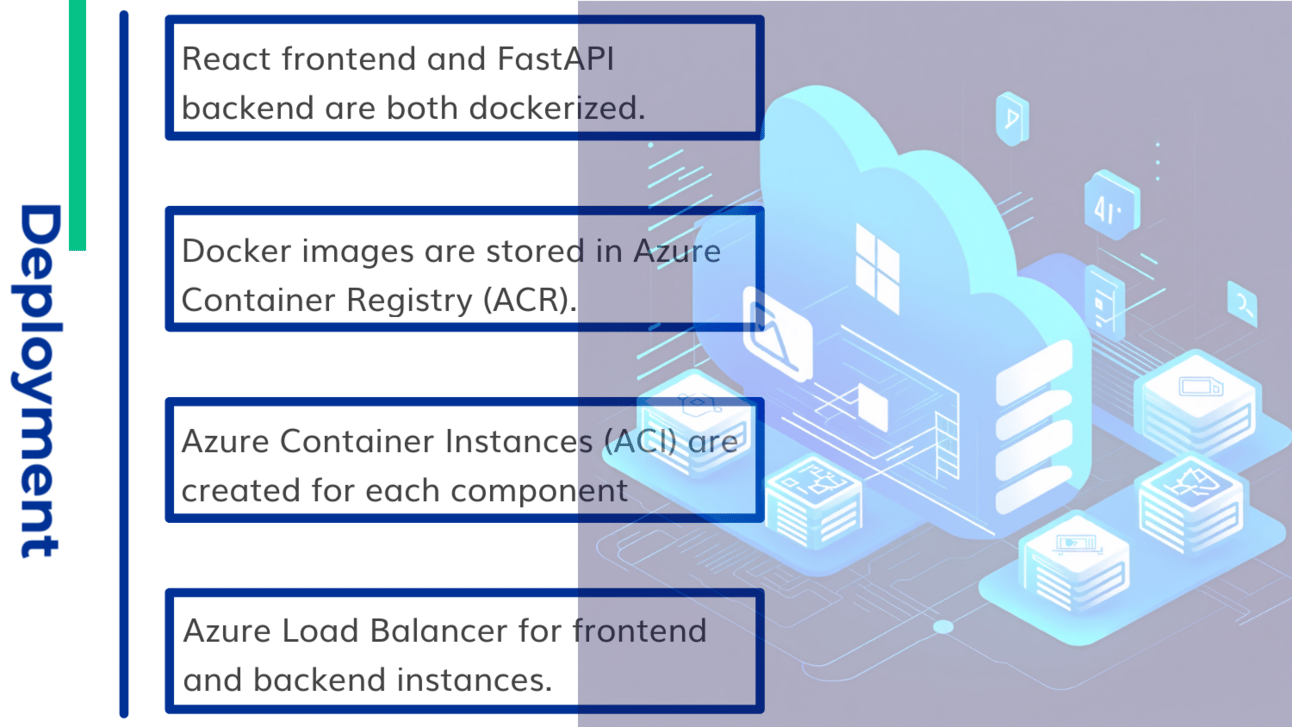

The deployment strategy involves containerizing both the React frontend and the FastAPI backend using Docker. These Docker images are stored in Azure Container Registry (ACR), which acts as a centralized repository for the application's containers. For hosting, Azure Container Instances (ACI) are created separately for both the frontend and backend services, ensuring that they run independently in isolated environments. To manage communication between the two, an Azure Load Balancer is set up, allowing the frontend instance to effectively route requests to the backend API, ensuring smooth data flow and responsiveness.

8.0 Graphs and Knowledge Graphs for Data Retrieval

Graphs and knowledge graphs play a vital role in enhancing data retrieval and management efficiency. Utilizing a graph structure to represent data enables seamless modeling and querying of relationships between different entities.

8.1 Graph-based Data Management Systems

Graph-based data management systems utilize a graph representation scheme to enhance the efficiency of data retrieval. In these systems, data is stored as nodes (entities) and edges (relationships), enabling swift data traversal and querying.
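As a minimal illustration of nodes, edges, and traversal, the sketch below stores a directed graph as an adjacency list and uses breadth-first search to find every entity within a given number of hops. The `Graph` class and the example entities are hypothetical.

```python
from collections import defaultdict, deque


class Graph:
    """Directed graph as an adjacency list: node -> [(relation, neighbor)]."""

    def __init__(self) -> None:
        self.edges: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def add_edge(self, src: str, relation: str, dst: str) -> None:
        self.edges[src].append((relation, dst))

    def reachable(self, start: str, max_hops: int) -> dict[str, int]:
        """Breadth-first traversal returning each reachable node and its hop count."""
        dist = {start: 0}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            if dist[node] == max_hops:
                continue  # do not expand beyond the hop limit
            # .get avoids inserting empty entries into the defaultdict
            for _, neighbor in self.edges.get(node, []):
                if neighbor not in dist:
                    dist[neighbor] = dist[node] + 1
                    queue.append(neighbor)
        return dist


g = Graph()
g.add_edge("Study-A", "uses", "Compound-X")
g.add_edge("Compound-X", "documented_in", "SOP-7")
g.add_edge("SOP-7", "owned_by", "QA")
print(g.reachable("Study-A", max_hops=2))
# {'Study-A': 0, 'Compound-X': 1, 'SOP-7': 2}
```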

8.2 Knowledge Graphs

Knowledge graphs represent knowledge in a structured manner, comprising entities (nodes) and relationships (edges). These graphs serve various purposes such as semantic search, similarity search, and retrieval-augmented generation (RAG). Knowledge graphs can be indexed and queried with high efficiency.
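Knowledge graphs are often stored as (subject, predicate, object) triples. This sketch keeps in-memory indexes by subject and by predicate so that common pattern lookups avoid scanning every triple; it is a toy stand-in for a real triple store or graph database, and its names and sample facts are illustrative.

```python
from collections import defaultdict
from typing import Optional

Triple = tuple[str, str, str]  # (subject, predicate, object)


class TripleStore:
    """Toy knowledge graph with subject and predicate indexes (hypothetical)."""

    def __init__(self) -> None:
        self.triples: set[Triple] = set()
        self.by_subject: dict[str, set[Triple]] = defaultdict(set)
        self.by_predicate: dict[str, set[Triple]] = defaultdict(set)

    def add(self, s: str, p: str, o: str) -> None:
        t = (s, p, o)
        self.triples.add(t)
        self.by_subject[s].add(t)
        self.by_predicate[p].add(t)

    def match(self, s: Optional[str] = None, p: Optional[str] = None,
              o: Optional[str] = None) -> set[Triple]:
        """Return triples matching the pattern; None acts as a wildcard."""
        # Narrow candidates with an index; there is no object index here,
        # so object-only patterns fall back to scanning every triple.
        if s is not None:
            candidates = self.by_subject.get(s, set())
        elif p is not None:
            candidates = self.by_predicate.get(p, set())
        else:
            candidates = self.triples
        return {
            t for t in candidates
            if (s is None or t[0] == s) and (p is None or t[1] == p) and (o is None or t[2] == o)
        }


kg = TripleStore()
kg.add("Compound-X", "tested_in", "Study-A")
kg.add("Compound-X", "tested_in", "Study-B")
kg.add("Study-A", "reported_in", "CSR-001")
print(sorted(kg.match(p="tested_in")))
# [('Compound-X', 'tested_in', 'Study-A'), ('Compound-X', 'tested_in', 'Study-B')]
```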

8.3 Graph Query Languages and Interfaces

Various graph query languages and interfaces have been created to facilitate the querying of graph-structured data:

SPARQL: Specifically designed for RDF graphs commonly utilized in knowledge graphs

Visual graph query interfaces: Enable users to construct queries through a visual representation of graph patterns

These tools significantly enhance user experience by simplifying the process of exploring and extracting pertinent data from graph databases.
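SPARQL queries are built from basic graph patterns: triple patterns containing variables such as `?doc`, joined on shared variables. The naive evaluator below sketches that idea in Python. It is not a SPARQL engine (no parser, no `FILTER` or `OPTIONAL`, no query optimization), and its function names and sample triples are hypothetical.

```python
from typing import Optional

Triple = tuple[str, str, str]
Binding = dict[str, str]  # variable name (e.g. "?doc") -> bound value


def is_var(term: str) -> bool:
    return term.startswith("?")


def match_pattern(pattern: Triple, triple: Triple, binding: Binding) -> Optional[Binding]:
    """Unify one triple pattern with one triple, extending the binding or failing."""
    new = dict(binding)
    for term, value in zip(pattern, triple):
        if is_var(term):
            if term in new and new[term] != value:
                return None  # variable already bound to something else
            new[term] = value
        elif term != value:
            return None  # constant term does not match
    return new


def evaluate_bgp(patterns: list[Triple], triples: set[Triple]) -> list[Binding]:
    """Join the triple patterns left to right (naive nested-loop evaluation)."""
    bindings: list[Binding] = [{}]
    for pattern in patterns:
        next_bindings: list[Binding] = []
        for b in bindings:
            for t in triples:
                extended = match_pattern(pattern, t, b)
                if extended is not None:
                    next_bindings.append(extended)
        bindings = next_bindings
    return bindings


triples = {
    ("CSR-001", "mentions", "Compound-X"),
    ("CSR-002", "mentions", "Compound-Y"),
    ("Compound-X", "tested_in", "Study-A"),
}
# Roughly: SELECT ?doc WHERE { ?doc :mentions ?drug . ?drug :tested_in :Study-A }
results = evaluate_bgp([("?doc", "mentions", "?drug"), ("?drug", "tested_in", "Study-A")], triples)
print([r["?doc"] for r in results])  # ['CSR-001']
```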

8.4 Combining Graphs with Language Models

Graph-structured data can be combined with large language models (LLMs) to enhance information retrieval and generation tasks in several ways:

Knowledge graph creation and completion employing LLMs

Retrieval-augmented generation (RAG) systems, which retrieve pertinent subgraphs to enhance language model results

Graph-based RAG methodologies that utilize the structured nature of graphs for comprehensive, query-focused summarization and retrieval

Through the integration of graphs and LLMs, these systems offer robust and adaptable approaches for querying and reasoning across extensive knowledge repositories.
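A graph-based RAG step can be sketched as: find the entities named in the question, collect the triples within a few hops of them, and serialize that subgraph as text for the prompt. This assumes naive case-insensitive substring matching in place of proper entity linking, and the function names and sample triples are hypothetical.

```python
from collections import deque

Triple = tuple[str, str, str]  # (subject, predicate, object)


def retrieve_subgraph(question: str, triples: list[Triple], hops: int = 1) -> list[Triple]:
    """Collect triples within `hops` edges of any entity named in the question."""
    entities = {e for s, _, o in triples for e in (s, o)}
    # Naive entity linking (assumption): substring match on the lowercased question.
    seeds = {e for e in entities if e.lower() in question.lower()}
    frontier = deque((e, 0) for e in seeds)
    seen = set(seeds)
    selected: list[Triple] = []
    while frontier:
        node, depth = frontier.popleft()
        if depth == hops:
            continue
        for t in triples:
            s, _, o = t
            if node in (s, o) and t not in selected:
                selected.append(t)
                other = o if node == s else s  # edges are followed in both directions
                if other not in seen:
                    seen.add(other)
                    frontier.append((other, depth + 1))
    return selected


def subgraph_to_context(subgraph: list[Triple]) -> str:
    """Serialize triples as plain-text facts to include in the LLM prompt."""
    return "\n".join(f"{s} {p.replace('_', ' ')} {o}." for s, p, o in subgraph)


kg = [
    ("Compound-X", "tested_in", "Study-A"),
    ("Study-A", "reported_in", "CSR-001"),
    ("Compound-Y", "tested_in", "Study-B"),
]
context = subgraph_to_context(retrieve_subgraph("Which studies tested Compound-X?", kg, hops=1))
print(context)  # Compound-X tested in Study-A.
```

The serialized facts would then be combined with the user's question in the same way as retrieved document passages in a text-based RAG pipeline.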

9.0 Conclusion

9.1 Unleash the Power of Your Data with ContinuousGPT

In today's fast-paced life science enterprise, the ability to quickly access and leverage critical information is paramount. ContinuousGPT emerges as a game-changing solution, transforming how you interact with your vast data repositories.

9.2 Seamless Integration, Unparalleled Efficiency

By integrating with platforms like OneDrive, Teams, Confluence, SharePoint, and Veeva, ContinuousGPT breaks down information silos, allowing you to chat with your data regardless of its location or format. This seamless integration means you can say goodbye to the frustration of juggling multiple document repositories and struggling with outdated "brick style" applications.

9.3 Security and Compliance at the Forefront

ContinuousGPT understands the unique needs of regulated industries. Delivered "validated" and installable within your network, it ensures your sensitive data remains secure within your IT boundaries. This commitment to data protection allows you to harness the power of AI-driven chatting without compromising on compliance.

9.4 Advanced Technology, Simplified Experience

Leveraging cutting-edge technologies like RAG (Retrieval-Augmented Generation) and knowledge graphs, ContinuousGPT offers unparalleled accuracy and relevance in its responses. Yet, for users, the experience is refreshingly simple – think "chat" instead of "clicks" and "frowns".

Make the smart choice for your enterprise. Choose ContinuousGPT – where your data speaks, and success listens.

10.0 FAQs

| Question | Answer |
|---|---|
| 1. What is ContinuousGPT? | ContinuousGPT is an AI-powered chatbot designed for the life sciences industry. It allows users to interact with data from various sources within their network through a conversational interface, eliminating the need to navigate multiple systems and applications. |
| 2. How is ContinuousGPT different from ChatGPT? | While both utilize AI for conversations, ContinuousGPT focuses on securely accessing and querying data within a company's IT infrastructure. It can be installed on your network, ensuring data privacy and compliance. Additionally, it's specifically designed to handle data extraction and analysis from legacy systems common in the life sciences. |
| 3. What are the key features of ContinuousGPT? | ContinuousGPT boasts features like: 🪢Azure AD Authentication: Secure login and access control. 🪢Platform-Specific Chat Agents: Dedicated agents for different platforms like OneDrive, Confluence, SharePoint, and Veeva. 🪢Conversational Responses: Human-like interaction with data retrieval. 🪢Stored Chat History: Review past conversations and continue previous chats. |
| 4. How does ContinuousGPT ensure data security and compliance? | ContinuousGPT prioritizes data security and compliance by: 🪢Being installable within your network, keeping data within your IT boundaries. 🪢Utilizing Azure AD Authentication for secure access control. 🪢Complying with relevant regulations for the life sciences industry (GxP). |
| 5. What is RAG and how does it benefit ContinuousGPT? | RAG (Retrieval-Augmented Generation) enhances ContinuousGPT by connecting it to external knowledge bases. This allows the AI to provide more accurate, up-to-date, and contextually relevant information than traditional language models. |
| 6. How does ContinuousGPT utilize graphs and knowledge graphs? | ContinuousGPT leverages graph-based data management systems and knowledge graphs to efficiently represent and query relationships between data points. This allows for more comprehensive and insightful data retrieval, enhancing the accuracy and depth of the chatbot's responses. |
| 7. What are the deployment options for ContinuousGPT? | ContinuousGPT utilizes a containerized deployment strategy using Docker, Azure Container Registry, and Azure Container Instances. This ensures scalable and independent operation of the frontend and backend services. |
| 8. How does ContinuousGPT simplify data access for life science enterprises? | ContinuousGPT simplifies data access by providing a user-friendly chat interface that eliminates the need to navigate complex systems and applications. It offers seamless integration with various data sources, enabling quick and easy retrieval of critical information while maintaining data security and compliance. This ultimately streamlines workflows, enhances decision-making, and improves overall efficiency within life science organizations. |