1. Introduction

What the State of Enterprise AI 2025 Means for Validated AI

Over the last five articles in this series, we have traced a clear path:

From AI ambition to validated execution

From experimentation to compliance-first acceleration

From vision decks to audit-ready outcomes

From isolated pilots to AAA as pharma’s execution layer

Now, broader enterprise data has caught up.

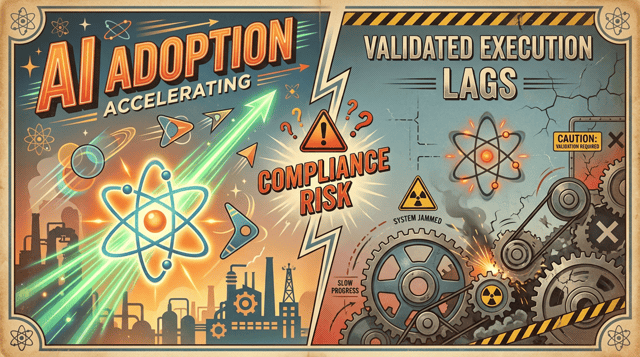

OpenAI’s “State of Enterprise AI 2025” report provides the most comprehensive real-world evidence yet of AI use at scale inside organizations. More importantly, it reveals a critical fault line for regulated industries:

“AI adoption is accelerating rapidly, but validated, governed execution lags behind.”

For GxP organizations, this gap is not just about maturity. It is a compliance, quality, and business risk issue.

2. Enterprise AI has entered the infrastructure phase

According to the OpenAI report:

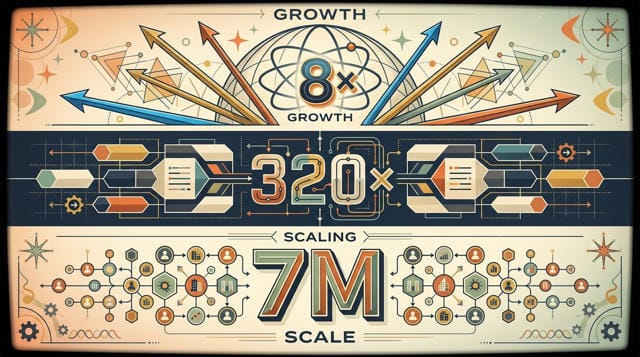

Enterprise AI usage grew 8× year-over-year

API reasoning workloads expanded 320× per organization

More than 7 million enterprise users now rely on AI daily

Custom GPTs and structured workflows are replacing ad-hoc prompting

This marks a fundamental shift.

AI is no longer a “tool” or an “experiment.” It is becoming core enterprise infrastructure.

Infrastructure in regulated environments must not be opaque, unvalidated, untraceable, or ungoverned. This is where most enterprise AI programs, especially in life sciences, begin to fracture.

3. The productivity signal is real, but it’s not the whole story

The OpenAI report shows compelling productivity gains:

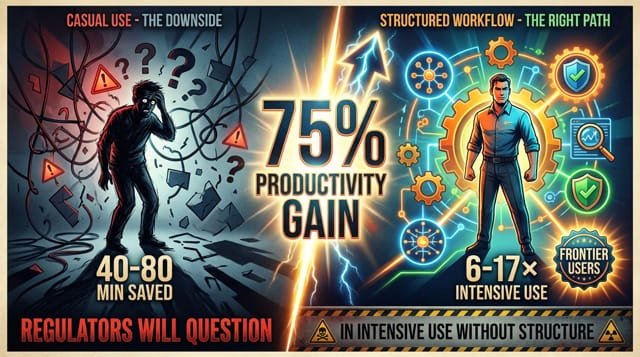

75% of workers report faster or higher-quality output

40–80 minutes saved per user per day

Engineers, analysts, and quality-adjacent roles show the highest gains

However, the report reveals something more important for GxP leaders:

The largest gains occur only when AI is deeply embedded into structured workflows, not when it is used casually.

Frontier users:

Use AI 6–17× more intensively

Engage across multiple task types

Consume advanced reasoning, analytics, and automation features

In regulated industries, “intensive use” without structure is exactly what regulators will question.

4. Why GxP organizations cannot follow the general enterprise playbook

Most enterprises can tolerate loosely controlled AI practices in their operations. In a GxP setting, each of these practices creates a problem:

Inconsistent usage leads to unpredictable behavior and prevents demonstrating repeatability during audits or inspections.

Informal prompt engineering introduces undocumented logic into decision-making, which cannot be reviewed, validated, or reproduced reliably.

Limited documentation prevents proving intended use, risk controls, and system behavior when challenged by regulators.

Retrospective controls detect issues only after they occur, increasing compliance breaches and delayed corrective actions.

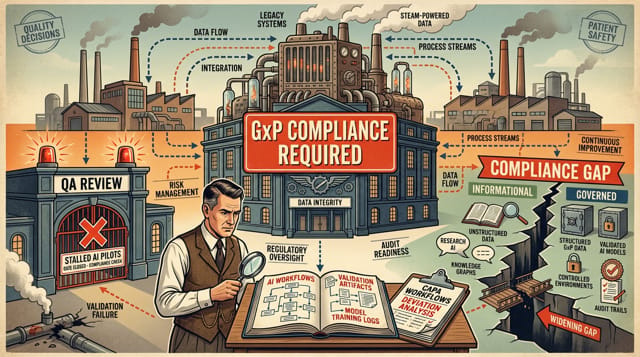

GxP organizations must meet strict regulatory expectations whenever AI affects the following operations:

Quality decisions influenced by AI must be explainable and evidence-backed, as they affect product safety and patient outcomes.

Validation artifacts generated or supported by AI must be traceable, consistent, and aligned with approved validation strategies.

Deviation analysis involving AI requires transparent logic to justify root-cause conclusions and corrective actions.

CAPA workflows supported by AI must show controlled recommendations and documented human oversight.

Regulatory submissions containing AI-derived insights must withstand scrutiny on data integrity, methodology, and decision rationale.

Clinical or manufacturing outcomes influenced by AI demand the highest control level, as errors can impact patient safety and product quality.

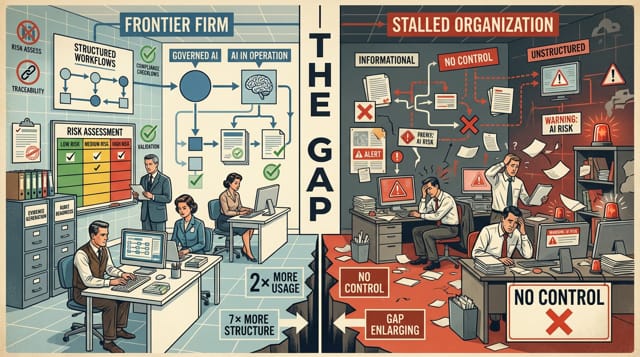

The OpenAI report highlights a widening gap:

Many enterprise users never activate reasoning, analysis, or search

Even fewer organizations implement standardized AI workflows

Only a small percentage treat AI as a governed system

For pharma and life sciences, this gap results in:

AI pilots that stall at QA review

Automation that cannot be validated

Innovation teams running ahead of compliance

Quality teams reacting instead of leading

5. Validated AI is the missing layer in enterprise AI adoption

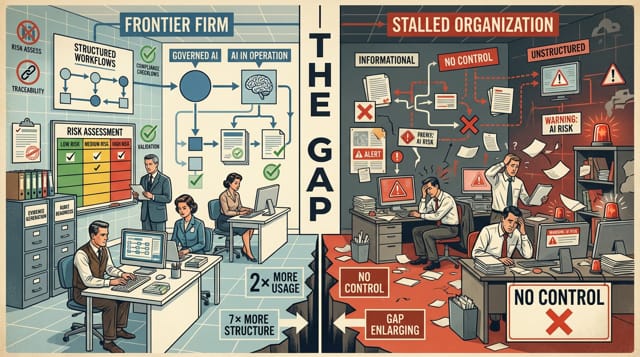

One of the most important signals in the OpenAI report is not adoption; it is variation.

The report repeatedly shows:

Frontier firms generate 2× more AI usage per seat

They use 7× more structured workflows

They invest in governance, evaluation, and standardization early

In other words:

The winners are not using “more AI.”

They are using AI more deliberately.

This is precisely the gap that validated AI execution fills.

Validated AI rests on five practices:

Define the system's purpose clearly so all stakeholders understand its scope, guiding development and compliance.

Use risk assessments to prioritize controls, focusing resources on the biggest risks to improve safety and compliance.

Ensure all data, models, and decisions are fully traceable and auditable to support transparency and accountability.

Make evidence generation part of routine work to naturally produce documentation and proof of compliance, reducing extra effort.

Align systems and processes with GxP standards from the start to ensure compliance, avoid costly fixes, and promote quality culture.

6. Why AAA aligns with the reality of enterprise AI in 2025

The OpenAI report confirms what regulated industries already experience:

AI scale is no longer the bottleneck. The capacity to scale AI systems has improved significantly, removing previous limits that slowed development and deployment.

Models are no longer the bottleneck. AI model performance and availability have advanced so they no longer restrict project speed or efficiency.

Tools are no longer the bottleneck. Software and platforms have evolved to support enterprise needs effectively, eliminating previous constraints.

Organizational readiness and validation are the bottlenecks. xLM’s AAA (Audit–Automate–Accelerate) framework addresses this reality.

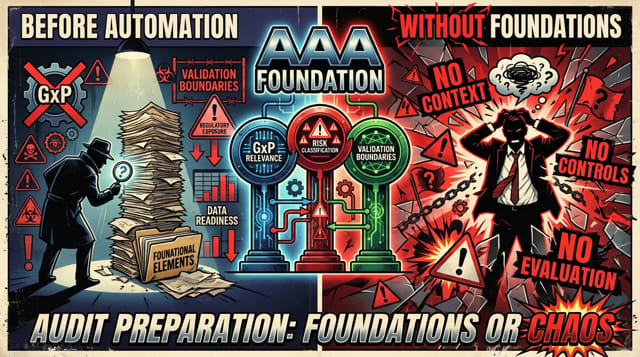

6.1. Audit

Before automation begins, AAA establishes the foundational elements the process depends on:

GxP relevance is determined by whether and how an AI use case impacts regulated processes, product quality, patient safety, or compliance obligations.

Risk classification defines AI system criticality based on intended use, potential failure modes, and impact on GxP outcomes.

Validation boundaries specify which components, decisions, and workflows require validation and which fall outside regulatory scope.

Regulatory exposure is assessed by identifying applicable regulations, guidance, and inspection expectations for the AI-enabled process.

Data and process readiness ensures source data quality, process stability, and operational controls support validated AI use.

This aligns with the report’s finding that many enterprises deploy AI without enabling context, controls, or evaluation.
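
The Audit checks above can be pictured as a simple triage step. The following is a minimal sketch, not xLM's implementation and not a regulatory rule: the `AIUseCase` fields, the three risk classes, and the decision logic are all illustrative assumptions chosen to show how GxP relevance, risk classification, and validation boundaries might be recorded consistently for each use case.

```python
from dataclasses import dataclass
from enum import Enum


class RiskClass(Enum):
    # Hypothetical three-level scale; a real program defines its own.
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class AIUseCase:
    # All fields are illustrative assumptions, not a standard schema.
    name: str
    affects_patient_safety: bool
    affects_product_quality: bool
    touches_regulated_records: bool
    human_reviews_every_output: bool


def is_gxp_relevant(uc: AIUseCase) -> bool:
    """A use case is treated as GxP relevant if it touches any regulated area."""
    return (
        uc.affects_patient_safety
        or uc.affects_product_quality
        or uc.touches_regulated_records
    )


def classify_risk(uc: AIUseCase) -> RiskClass:
    """Assumed rule: direct safety or quality impact without review is HIGH."""
    if not is_gxp_relevant(uc):
        return RiskClass.LOW
    direct_impact = uc.affects_patient_safety or uc.affects_product_quality
    if direct_impact and not uc.human_reviews_every_output:
        return RiskClass.HIGH
    return RiskClass.MEDIUM


def in_validation_scope(uc: AIUseCase) -> bool:
    """Assumed boundary: every GxP-relevant use case falls inside validation scope."""
    return is_gxp_relevant(uc)


deviation_triage = AIUseCase("deviation triage", False, True, True, False)
meeting_notes = AIUseCase("meeting notes summary", False, False, False, False)
print(deviation_triage.name, classify_risk(deviation_triage).value)
print(meeting_notes.name, classify_risk(meeting_notes).value)
```

The value of a step like this lies less in the rule itself than in having the classification written down and applied the same way to every use case before any automation is built.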

6.2. Automate

Automation streamlines repetitive tasks and processes to improve efficiency and reduce manual intervention.

Repeatable workflows ensure AI-assisted processes behave consistently across executions, supporting reproducibility and audit defensibility.

Embedded controls integrate risk checks, approvals, and safeguards directly into automated workflows instead of relying on manual oversight.

Human-in-the-loop decision points preserve accountable decision-making by requiring qualified personnel to review, approve, or override AI outputs where GxP impact exists.

Evidence generation produces validation-ready records, logs, and artifacts automatically as part of normal operation.

Explainability and traceability allow regulators and quality teams to understand how inputs were processed, decisions reached, and outcomes produced.

This mirrors the report’s observed shift toward structured AI workflows and reusable systems, not ad-hoc usage.
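
To make the ideas of human-in-the-loop decision points and automatic evidence generation concrete, here is a minimal sketch under stated assumptions. The `EvidenceLog` class, the `release_ai_output` function, and the event names are hypothetical and are not part of AAA or any specific product. The log chains each entry to the previous one with a SHA-256 hash, so later edits to a record become detectable.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    # Deterministic hash of an entry body (keys sorted for stability).
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class EvidenceLog:
    """Hypothetical append-only log; each entry stores the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, event: str, payload: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "event": event,
            "payload": payload,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "prev_hash": prev_hash,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and check the chain links in order."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


def release_ai_output(log, output, gxp_impact, reviewer=None, approved=False):
    """Release an AI output only after a recorded human decision when GxP impact exists."""
    log.append("ai_output_generated", {"output": output, "gxp_impact": gxp_impact})
    if not gxp_impact:
        log.append("auto_released", {})
        return True
    if reviewer is None:
        # No reviewer recorded: hold the output instead of releasing it.
        log.append("held_for_review", {"reason": "no reviewer recorded"})
        return False
    log.append("human_decision", {"reviewer": reviewer, "approved": approved})
    return approved
```

In this sketch the evidence is a by-product of running the workflow: every generated output, hold, and human decision lands in the log without a separate documentation step. A production system would also need access control, durable storage, and electronic-signature handling, none of which are shown here.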

6.3. Accelerate

Acceleration is not speed for its own sake. It is purposeful momentum toward meaningful progress, and it takes four forms:

Faster validation reduces time to establish fitness for use while preserving rigor, documentation, and controls expected in regulated environments.

Faster audit readiness ensures traceability, evidence, and documentation are maintained continuously, not created reactively under inspection.

Faster regulatory confidence builds when AI workflows consistently demonstrate transparency, control, and compliance throughout their lifecycle.

Faster business impact without compliance debt delivers value quickly while avoiding rework, remediation, or regulatory risk from governance or validation shortcuts.

The OpenAI data shows depth of use matters more than access. AAA ensures depth without risk.

7. Conclusion: 2026 is not the year of “more AI,” it is the year of defensible AI

The OpenAI report makes one thing clear:

“AI is now embedded across industries, geographies, and functions.”

For GxP organizations, the differentiator will not be:

Who adopts AI first

Who deploys the largest models

Who automates the most tasks

The differentiator will be:

Who can prove their AI is fit for purpose, controlled, and compliant at scale.

Validated AI is no longer a future concept. It is the execution requirement of the enterprise AI era.

In regulated industries, execution without validation is not innovation; it is exposure.